10. Feasible-Point Methods

- -

Introduction

In this article, we examine methods that solve constrained optimization problems by attempting to remain feasible at every iteration. If all the constraints are linear, maintaining feasibility is straightforward. However, when non-linear constraints are present, then more elaborate procedures are required. Although it strives to maintain feasibility at every iteration, they do not always achieve this.

Active-set Methods

subject to

- Bad news : It can’t be reformulated into unconstrained optimization.

- Good news : We have methods for equality constraints.

How can we resolve this issue? A brute force approach would be to solve the equality-constrained problem for all possible selections of active constraints, and then choose the best solution. However, this approach can’t be applicable even though the number of constraints are quite small.

Active-set methods attempt to overcome this difficulty by moving sequentially from one choice of active constraints to another choice that is guaranteed to produce at least as good a solution.

The most commonly used active-set methods are feasible-point methods. An initial feasible point, if none is provided, can be obtained by using linear-programming. At each iteration of the active-set method, we select a working set of constraints that are assumed to be active at the optimum.

linear inequality constraints.Algorithm

- Initialize with some working set W of active constraints

- Iterate

- Optimality check

- If no constraints are active and the current point is an unconstrained stationary point, then stop

- If there are active constraints and the current point is optimal with respect to the active constraints, compute the Lagrange multipliers

- If the multipliers are non-negative, stop. Otherwise, drop a constraint with negative multiplier from W.💡즉, multiplier가 전부 다 양수라는 의미는 gradient 반대 방향이 interior로 절대로 들어오지 않는다는 의미이다. (constraint를 ≥로 잡은 상태이므로 이렇게 해석할 수 있다.). 이 의미는 active constraint에 대해서 optimal이고 interior point로 들어가는 순간 함숫값이 커진다는 의미이므로 local optimum이라고 단정할 수 있는 것이다.

- Search direction

- Determine a descent direction p that is feasible with respect to W

- Step length

- Determine a satisfying f(xk+αp)<f(xk) and α≤αˉ where αˉ is the maximum possible step length along p

- Update

- xk+1=xk+αp. If new constraint boundary encountered, add to W.

- Optimality check

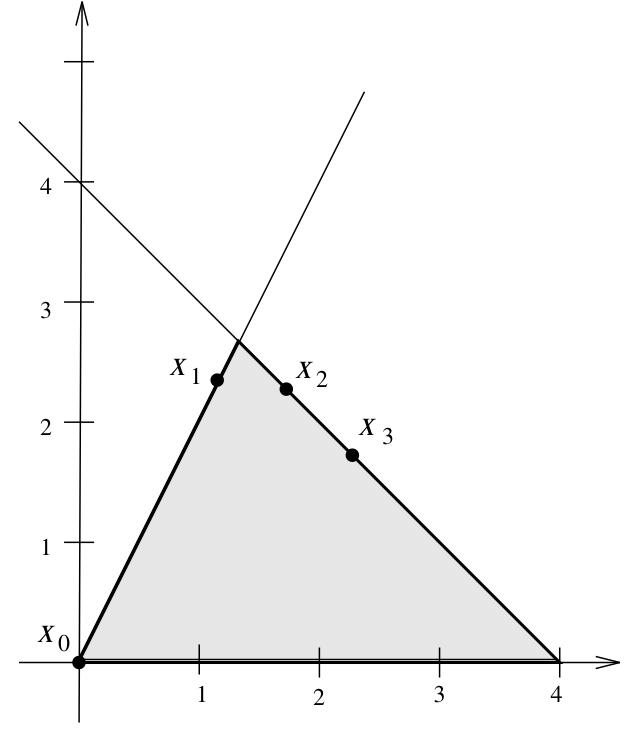

Example

subject to

when starting at x1=0 and x2=0

- Active constraints : 1, 3

- Find λ1,λ2 such that∇f(0,0)=λ1∇g1(0,0)+λ3∇g3(0,0)⇒[−3−4]=λ1[2−1]+λ2[01]⇒λ1=−23,λ2=−211

- Since λ1,λ2<0, the current point is not a optimal point.

- Drop x2≥0 constraint:xmin21(x1−3)2+(x2−2)2

subject to

2x1−x2=0

- Find the null spaceA=[2−1]

Therefore,

N(A)=sp{(1,2)}💡Null space 방향을 search direction으로 설정

- Take(x1,x2)=(0,0)+δ(1,2)

- Solveδmin21(δ−3)2+(2δ−2)2⇒δ=911⇒x1=911,x2=922

Since, x1,x2 doesn’t violate the other constraints, it is in the feasible reasion.

- Check the optimality condition again∇f(911,922)=λ1∇g1(911,922)[−91698]=λ1[2−1]⇒λ1=−98

→ It is also not a optimal point. So we also drop this constraint also

- We are now left with

noactive constraints, which means that the problem is locally unconstrained. Therefore, reduced gradient is simply the unconstrained Newton directionp=−∇2f(x1)−1∇f(x1)

- Calculate the largest feasible step by considering the given constraints

- Iterate above procedures

Sequential Quadratic Programming (SQP)

Sequential quadratic programming is a popular and successful technique for solving non-linearly constrained problems such as

subject to

The main idea is to obtain a search direction by solving a quadratic program, that is a problem with a quadratic objective function and linear constraints.

Idea

The Lagrangian for this problem is

and the first-order optimality condition is

Then the formula for Newton’s method is

where pk and vk are obtained as the solution to the linear system

Consider the following the constrained minimization problem where (xk,λk) is our Newton iteration at step k

subject to

quadratic in pThis minimization problem has Lagrangian

Note that the first optimality condition for above Lagrangian is

which are equivalent to the original first-optimality condition. Then solving ∇Lk(p,vk)=0 gives a linear system, which is the same as the k’th Newton step of the original problem.

The quadratic function is a Taylor series approximation to the Lagrangian at (xk,λk), and the constraints are a linear approximation to g(xk+pk)=0

Issue

SQP is an important method, and there are many issues to be considered to obtain an efficient and reliable implementation

- Efficient solution of the lienar system at each Newton iteration

→ block matrix structure can be exploited

- Quasi-Newton approximations to the ∇xx2L(xk,λk) as in the unconstrained case

- Trust region, line search to improve robustness

- Treatment of constraints (equality and inequality) during the iterative process

- Selection of good starting guess for λ

소중한 공감 감사합니다