11. Penalty and Barrier Methods

- -

Introduction

In the last article, we discussed the use of feasible-point methods for solving constrained optimization problems. These methods are based on minimizing the Lagrangian function while attempting to attain and maintain feasibility. When inequality constraints are present, these methods solve a sequence of subproblems with a changing active set until a solution to the original constrained problem is found.

However, there are some major disadvantages to this approach.

- As the number of constraints increases, the number of potential subproblems.

- The idea of keeping the constraints satisfied exactly, although easily achieved in the case of linear constraints, is much more difficult to accomplish in the case of nonlinear constraints.

Some of these disadvantages can be removed by using penalization methods. These methods solve a constrained optimization problem by solving a sequence of unconstrained optimization problems. The hope is that in the limit, the solutions of the unconstrained problems will converge to the solution of the constrained problem.

In contrast to active-set methods, this approach takes into account all constraints, and thus the difficulties of guessing a correct active set are avoided. Further, since penalization techniques don’t attempt to keep the constraints satisfied exactly, they can be more suitable for handling non-linear constraints.

Although penalization methods ameliorate some of the difficulties, they introduce difficulties of their own. In particular, it can give rise to ill-conditioning problems.

Classical Penalty and Barrier Methods

The general class of penalization methods includes two groups of methods:

- penalty methods: imposes a penalty for

violatinga constraint

- barrier methods: imposes a penalty for

reaching the boundaryof an inequality constraint

Suppose that our problem is given in the form:

subject to

where S is the set of feasible points.

Define

The function σ can be considered as an infinite penalty for violating feasibility. Hence the constrained problem can be transformed into an equivalent unconstrained problem in the form:

However, this is not a practical idea, since the objective function of the unconstrained minimization is not defined outside the feasible region. Moreover, it makes a discontinuities within the boundaries.

Therefore, barrier and penalty methods solve a sequence of unconstrained subproblems that are more manageable, and that gradually approximate the infinite penalty function.

Barrier Methods

Consider the nonlinear inequality constrained problem

subject to

Barrier methods use a barrier term that approaches the infinite penalty function σ. Let ϕ(x) be a function that is continuous on the interior of the feasible set, and that becomes unbounded as the boundary of the set is approached from its interior:

There are two examples:

- Logarithmic functionϕ(x)=−i=1∑mlog(gi(x))

- Inverse functionϕ(x)=i=1∑mgi(x)1

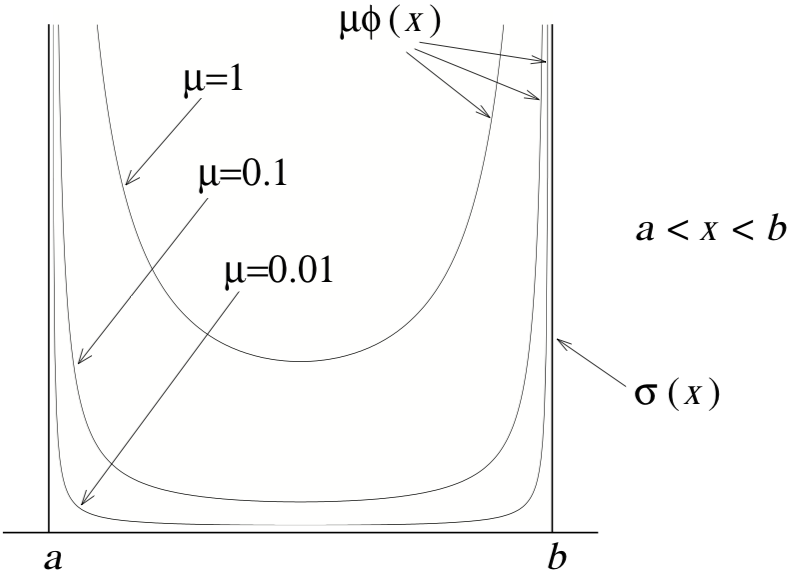

Now let μ be a positive scalar. Then μϕ(x) will approach σ(x) as μ approaches zero.

By adding a barrier term of the form μϕ(x) to the objective, we obtain a barrier function

where μ is referred to as the barrier parameter

Barrier methods solve a sequence of unconstrained minimization problem of the form

for a sequence {μk} of positive barrier parameters that decrease monotonically to zero.

Why solve a sequence of problems? The reason is that when the barrier parameter is small, the discontinuous at a and b makes a problem. For this reason we start with larger values of the barrier parameter. If μ is decreased gradually, and if the solution of one unconstrained problem is used as the starting point of the next problem, these unconstrained minimization problems tend to be much easier to solve.

Penalty Methods

In contrast to barrier methods, penalty methods solve a sequence of unconstrained optimization problems whose solution is usually infeasible to the original constrained problem.

An advantage of penalty methods is that they don’t require the iterates to be strictly feasible. Thus, unlike barrier methods, they are suitable for problems with equality constraints.

Consider the equality constraint problem

subject to

where g(x) is a m-dimensional vector whose i’th component is gi(x)

The penalty for constraint violation will be a continuous function ψ

There are two examples:

- l2 loss function

- Another penalty funcitonψ(x)=γ1i=1∑m∣gi(x)∣γ

where γ≥1

The weight of the penalty is controlled by a positive penalty parameter ρ. As ρ increases, the function ρψ approaches to σ. We can define the penalty function as follows

The penalty method consists of solving a sequence of unconstrained minimization problems of the form

for an increasing sequence {ρk} of positive values tending to infinity.

However, it also has a problem

- same numerical issue as for barrier method when ρ is increased.

- f may be undefined for infeasible point (e.g. square root of a negative number)

What about inequality problem? Think about this case

subject to

In this case, we can use the penalty function by using

If

, the penalty value is 0.

However, if

, the penalty value is greater than 0.

소중한 공감 감사합니다