2. Optimality Conditions, Convexity, Newton’s Method for Equations

- -

Optimality Conditions: Preliminaries

- Local minima and maxima have one thing in common∇f(x)=0

First-order Necessary Condition

From now on f is differentiable and that its first and second derivatives are continuous for every x∈X where X is a domain of f

If x∗ is a local minimum, then

Second-order Conditions

If x∗ is a local minimum, then ∇2f(x∗) is positive semi-definite

Proof

But you should note that even though ∇f(x∗)=0 and ∇2f(x∗)≥0, we can’t be sure that x∗ must be a local minimum.

Think about,

That means there is no sufficient and necessary condition in general

Second-order sufficient condition

It is sufficient to guarantee that x∗ is a local minimizer. If

then x∗ is a strict local minimizer of f.

Proof

Since ∇f(x∗)=0,

f(x∗+p)=f(x∗)+21vT∇2f(ζ)vwhere ζ∈Np(x∗)

Since ∇2f is continuous, ∃δ such that

∥ζ−x∗∥<δ⇒∥∇2f(ζ)−∇2f(x∗)∥<21vT∇2f(x∗)vTake v=min(1,δ)

💡Note that ζ∈Nv(x∗)Moreover, since ∇2f(x∗) is positive definite,

vT∇2f(x∗)v>0,∀v(v=0)vT∇2f(x∗)v−vT∇2f(ζ)v=vT(∇2f(x∗)−∇2f(ζ))v≤∥v∥∥(∇2f(x∗)−∇2f(ζ))v∥≤∥v∥∥∇2f(x∗)−∇2f(ζ)∥∥v∥≤∥v∥2∥∇2f(x∗)−∇2f(ζ)∥≤21vT∇2f(x∗)v💡1) bi-linearity of inner-product2) Cauchy-schwarz inequality

3) defi

4) Associativity of multiplication in R

Therefore,

0<21vT∇f(x∗)v≤vT∇2f(ζ)vThis means that ∇2f(ζ) is also positive definite. So, x∗ is a local minimum.

💡Since v has a restriction on its domain, wecan notguarantee that x∗ is a global minimum.

Convexity

- A set S is

convexif any x∈S and y∈S,

- A function f is

convexon a convex set S if for any x∈S and y∈S,f(αx+(1−α)y)≤αf(x)+(1−α)f(y),∀α∈[0,1]

- A minimization problem is

convexif f is convex and S is convex

Implication of Convexity

For a convex optimization problem, a local optimum x∗ is also a global optimum

If the feasible region is convex, it implies that all the points along the line segment between the current solution and the new feasible solution are also feasible.

Therefore, any smaller step size will still allow us to move along the same direction from the current solution, while remaining within the feasible region. This is because the convexity property guarantees that the entire line segment between the current solution and the new feasible solution lies within the feasible region.

This property is valuable in optimization algorithms, as it ensures that if we have found a feasible solution, we can always refine it further by taking smaller steps while maintaining feasibility. It provides flexibility in adjusting the step size and allows for a more precise exploration of the feasible region.

How to recognize convexity?

- One dimension case : A function f is convex if and only if f′′(x)≥0 for all x∈X where X is a domain of f

- Multiple dimension case

- f is convex if and only if Hessian ∇2f(x)≥0 for all x∈X where X is a domain of f

- If ∇2f(x) is positive definite for all x∈X (i.e. ∇2f(x)>0,∀x∈X), then f is strictly convex

→ Note that it is not true for opposite direction

Convexity and Optimality Condition

Actually if f is convex, local optimum and global optimum is equivalent. In addition,

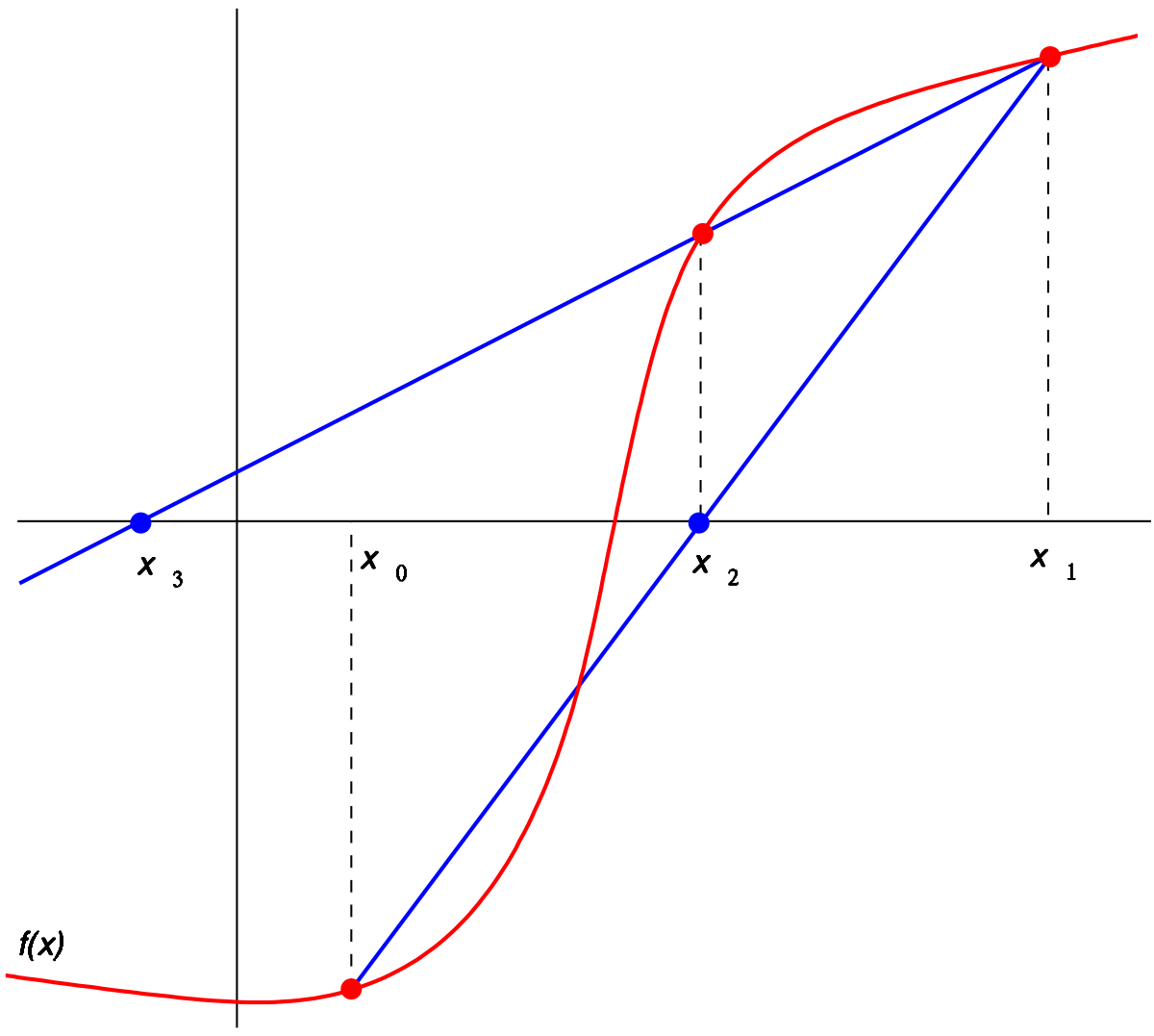

Newton’s Method for Nonlinear Equations

- A sequence of points as approximate solutions

- The sequence converges if it approaches a solution of the equation

Especially for one dimension,

f(xk+p)≈f(xk)+pf′(xk)This means that

f(xk)+pf′(xk)=0→p=−f′(xk)f(xk)Therefore,

xk+1=xk−f′(xk)f(xk)- This is the

Newton-Raphsonmethod

Newton's methodIn numerical analysis, Newton's method, also known as the Newton–Raphson method, named after Isaac Newton and Joseph Raphson, is a root-finding algorithm which produces successively better approximations to the roots (or zeroes) of a real-valued function. The most basic version starts with a real-valued function f, its derivative f′, and an initial guess x0 for a root of f. If f satisfies certain assumptions and the initial guess is close, then

Newton's methodIn numerical analysis, Newton's method, also known as the Newton–Raphson method, named after Isaac Newton and Joseph Raphson, is a root-finding algorithm which produces successively better approximations to the roots (or zeroes) of a real-valued function. The most basic version starts with a real-valued function f, its derivative f′, and an initial guess x0 for a root of f. If f satisfies certain assumptions and the initial guess is close, then https://en.wikipedia.org/wiki/Newton's_method

https://en.wikipedia.org/wiki/Newton's_method - This is the

- A related derivative-free method is the

secant method xk+1=xk−f(xk)f(xk)−f(xk−1)xk−xk−1💡It can be viewed as approximate of the derivative by using average rate of change.Secant methodIn numerical analysis, the secant method is a root-finding algorithm that uses a succession of roots of secant lines to better approximate a root of a function f. The secant method can be thought of as a finite-difference approximation of Newton's method. However, the secant method predates Newton's method by over 3000 years.

xk+1=xk−f(xk)f(xk)−f(xk−1)xk−xk−1💡It can be viewed as approximate of the derivative by using average rate of change.Secant methodIn numerical analysis, the secant method is a root-finding algorithm that uses a succession of roots of secant lines to better approximate a root of a function f. The secant method can be thought of as a finite-difference approximation of Newton's method. However, the secant method predates Newton's method by over 3000 years. https://en.wikipedia.org/wiki/Secant_method

https://en.wikipedia.org/wiki/Secant_method

- Convergent or not: depends on the

functionand theinitial point

Newton’s Method: Multiple Dimensions

Let g:Rn→Rn

That means

if ∇g(xk) is invertible

Therefore,

소중한 공감 감사합니다