A Generic Optimization Model

minf(x1,x2,…,xn) subject to

g1(x1,x2,…,xn)≤b1g2(x1,x2,…,xn)≤b2⋮gm(x1,x2,…,xn)≤bm In compact form

where x∈S (S : the set of all feasible solutions permitted by the constraints)

Assumptions

- Continuous variables, smooth functions (twice continuously differentiable unless stated otherwise)

- Linear programming (LP) and Non-linear programming (NLP)

- What do we mean by solving the problem?

- NLP : hard in general; we focus on a

local optimum

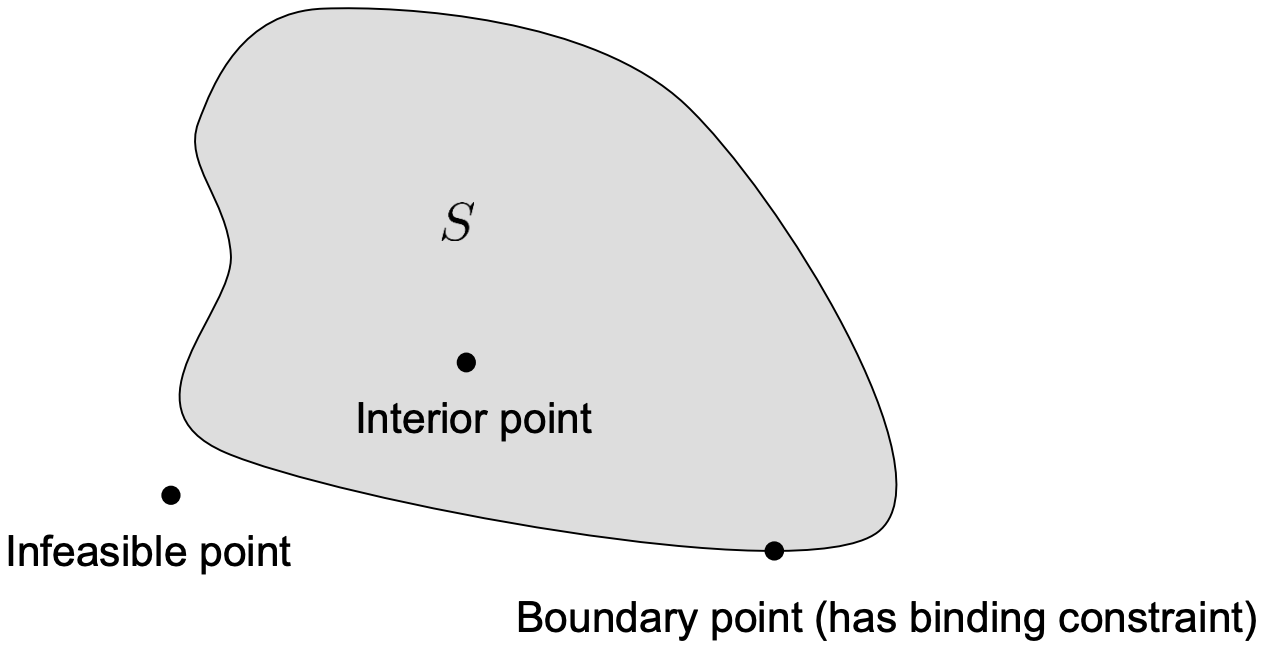

Boundary and Interior

- Boundary of S : formed by all feasible points with

at least one binding constraint; binding = an inequality is satisfied as equality

- Interior of S : the rest of S, or all feasible points without any binding constraint

Global and Local Optima

- x∗ is a global minimum if f(x∗)≤f(x),∀x∈S

- x∗ is a local minimum if f(x∗)≤f(x),∀x∈S such that d(x∗,x)≤ϵ

In general, it is difficult to find the global minimum.

Therefore, we will focus on finding a local minimum in nonlinear optimization problems.

How to Solve it?

- Take a sequence of steps to improve the objective function

- Convergence : A point that is locally optimal

General Algorithm

- Specify some initial guess of the solution x0

- For k = 0, 1, …

- If xk is locally optimal, stop

- Determine a search direction pk

- Determine a step length αk, such that xk+1=xk+αkpk is a better solution than xk

💡

Technically, we have to consider about the shape at the point.

Descent Direction

- It is desirable to have descent direction as our search direction

- Formal definition

A direction p of function fat point x is a descent direction, if there exists ϵ such that f(x+αp)<f(x),0<α≤ϵ

Note

Any direction p less than 90∘ from −∇f(x) is a descent direction

Hessian

Second-order derivative of a function f in several variables is called the Hessian

∇2f=∂x12∂2f∂x2∂x1∂2f⋮∂xn∂x1∂2f∂x1∂x2∂2f∂x22∂2f⋮∂xn∂x2∂2f⋯⋯⋮⋯∂x1∂xn∂2f∂x2∂xn∂2f⋮∂xn2∂2f The Hessian is a square and symmetric matrix

It is useful for optimality check, and function approximation.

Taylor Series

- One dimension

f(x0+p)≈f(x0)+pf′(x0)+21p2f′′(x0)+⋯

- Multiple dimensions

f(x0+p)≈f(x0)+pTf′(x0)+21pTf′′(x0)p+⋯

What about approximating f at point x0 with f(x0)+pT∇f(x0) and find the best possible p for the approximation?

pminf(x0)+pT∇f(x0) What about adding one more term in the approximation?

pminf(x0)+pT∇f(x0)+21pT∇2f(x0)p Sensitivity (Conditioning)

- There may be errors in the input data

- Also, data calculations are not completely accurate by computers

- Intuitively, we have an ill-conditioned problem if the solution is very sensitive to the change of data values

cond(A)=∥A∥⋅∥A−1∥ 💡

A singular matrix has condition number

∞.