6. Bayesian Networks

- -

Definition

Bayesian networks are networks of random variables

→ Each variable is associated with a node in the network

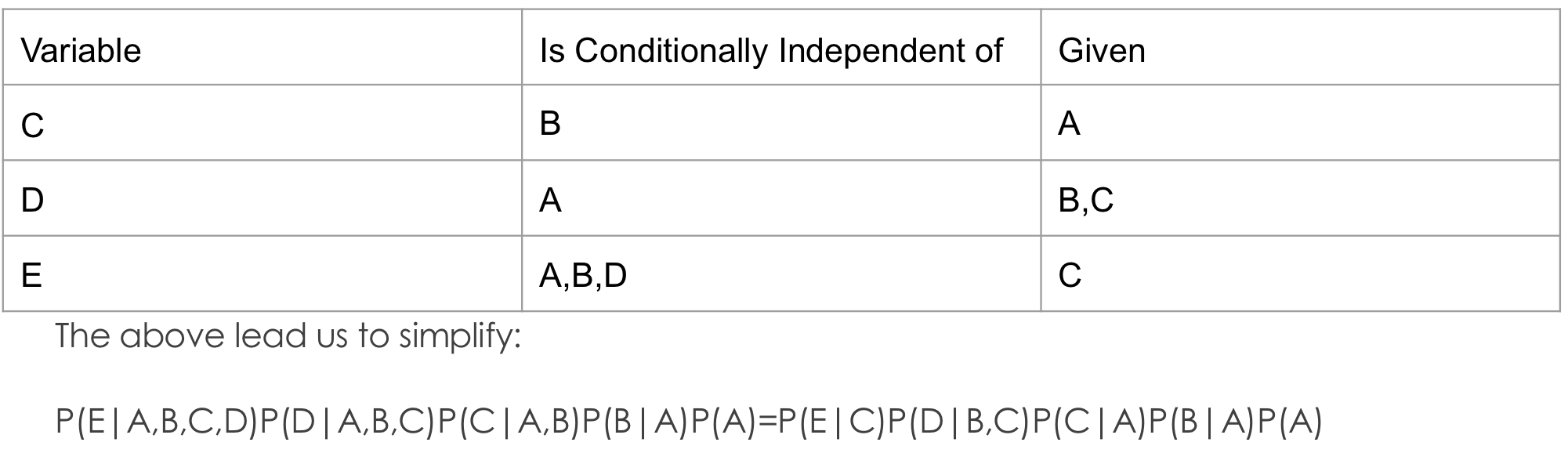

If we know of the existence of conditional independencies between variables we can simplify the network by removing edges

This leads to the simplified network

Causal Networks

While correlation (association) between variables is an important observation in data analysis, it does not provide evidence for establishing causal relationships.

→ Correlation doesn’t imply causation

Dynamic Bayesian Networks

Bayesian networks can be dynamic . In fact, a HMM(Hidden Markov model) is a very simple dynamic Bayesian network.

Fitting a Bayesian Network to Data

We could model this with any sort of statistical model we like.

→ 수업에서는 categorical distribution 을 사용함

Example

Markov Chain Monte Carlo (MCMC) Sampling

General Idea

We can estimate the probability distributions of any set of unknown variables given any set of observed variables. However, generally approximate inference is performed using sampling.

- Basic Idea

- Assume we want to estimate the distribution P

- Set up a Markov chain whose long run distribution is P

- Evolve the Markov chain to generate samples

→ As the number of samples approaches infinity, the sample distribution approaches P

- Use these samples to estimate P

- Approach

- Gibbs Sampler

- Metropolis

- Metropolis within Gibbs

- Aim : Given a set of known (or assumed) variables, E , estimate the probability distributions of the remaining unknown variables, U

- Algorithm

- Initialization

- Perform a topological sort on the graph

- Set all variables in E to their known/assumed values

- Randomly assign values to each variables in U

💡Topological sort를 하는 이유는 해당 정렬을 하게 되면 한 변수가 다른 변수에 의존하는 경우 그 의존하는 변수가 먼저 오게 된다는 것을 반드시 보장할 수 있기 때문이다.

- Generate n samples (done differently in each algorithm)

- Use these samples to estimate desired distributions

- Initialization

We can’t know that a run of MCMC samples has succeeded in converging to the desired distribution

- Common to perform multiple runs, from different initial origins

- If these converges with each other, we hope all have converged with the underlying distribution

→ But degenerate cases is possible : 즉 대상 분포가 아닌 다른 어떤 분포에 수렴하거나 chain 중 일부만 target distribution에 수렴하지 않을 수 있다는 의미이다.

Gibbs Sampling

The basic idea of Gibbs Sampling is to sequentially update each variable according to its conditional probability distribution, given the current values of all other variables.

Here are the basic steps in the Gibbs Sampling algorithm:

- Initialization: Set initial values for all variables randomly.

- Iteration: At each iteration step, select one variable and update it while keeping all other variables fixed. This variable is updated according to its conditional probability distribution given the current values of all other variables.

- Convergence: After enough iterations, the generated samples will approximate the target multivariate probability distribution.

Gibbs Sampling allows you to draw samples from complex multivariate distributions if you only know each variable's conditional distributions given others' current values - making it widely used in fields like Bayesian statistics and machine learning.

Note : The Markov blanket of a node in a Bayesian network consists of the node’s parents, its children, and co-parents of its children.

한 변수를 고르고 나머지를 고정시킨 상태로 update를 진행한다는 점이다. 반면 Metropolis의 경우 한번에 업데이트를 진행하게 된다.Metropolis

The Metropolis algorithm is a type of Markov Chain Monte Carlo method used for sampling for complex probability distributions

Here’s the basic working principle of the Metropolis algorithm:

- Initialization : Choose an

arbitrarystarting point

- Proposal : From you current position, propose a new point using a

proposal distribution. This proposal distribution is often something symmetric like a normal distribution, but it doesn’t have to be

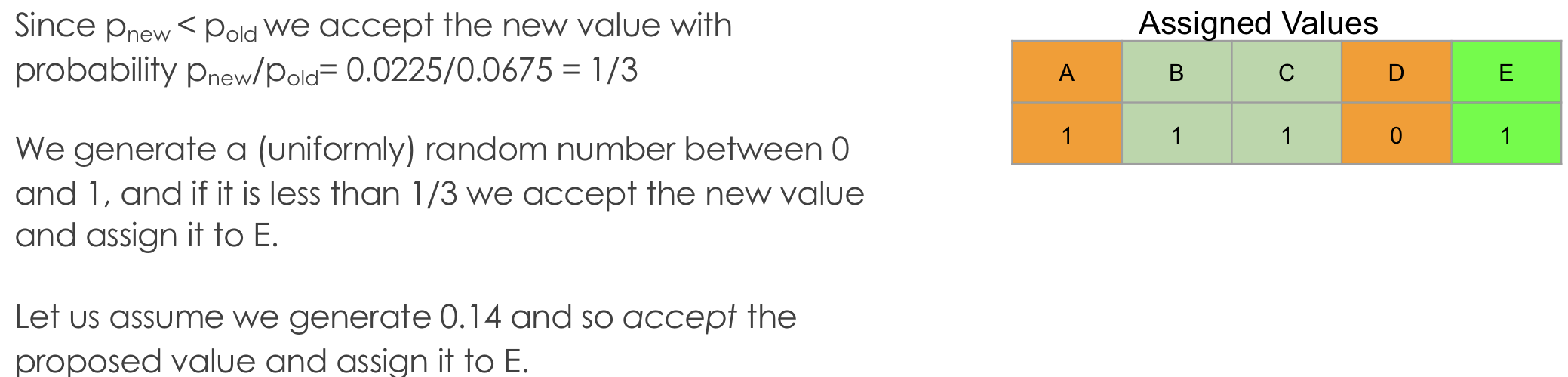

- Acceptance/Rejection : Calculate the probability values of the new and current points under your target probability distribution and decide whether to accept or reject the new point.

→ If the new point has higher probability than your current one, you always accept it. if not, you accept it with a probability proportional to the ratio of their probabilities under your target distribution. (p:min(1,pnew/pold))

- Iteration : Repeat this process many times to generate a Markov chain

After enough time has passed, this generated Markov chain will follow your target probability distribution. One reason why the Metropolis algorithm is widely used is its flexibility in application. It can be applied even when your objective function isn’t normalized or directly computable over all space.

Q : What is the different between Metropolis Algorithm and Gibbs Sampling

Both the Metropolis algorithm (and its generalized version, the Metropolis-Hastings algorithm) and the Gibbs sampling algorithm are types of Markov Chain Monte Carlo (MCMC) methods that make use of information about conditional distributions. However, they do so in different ways.

- Metropolis-Hastings Algorithm: This algorithm does not explicitly require knowledge of conditional distributions. Rather, it needs to know the target distribution up to a constant of proportionality. Given a current state in the Markov chain, it proposes a new state according to some proposal distribution and then decides whether to accept this new state or stay in the current state based on an acceptance probability that involves ratios of densities from the target distribution.

- Gibbs Sampling: This is a special case of Metropolis-Hastings where we specifically use full conditional distributions for proposing new states in our Markov chain. In Gibbs sampling, we cycle through each dimension (or variable) of our multivariate distribution one at a time and sample from its full conditional distribution while keeping all other dimensions fixed at their current values.

So while both algorithms utilize information about distributions associated with our target multivariate distribution, they do so differently: Metropolis-Hastings can operate with just knowledge about unnormalized densities from our target distribution while Gibbs sampling requires explicit knowledge about full conditional distributions.

Note : Care must be taken with the proposal function such that a reasonable acceptance rate is maintained. This can be difficult with a large number of variables.

→ In such a case, Metropolis in Gibbs may be a better alternative.

Metropolis in Gibbs

It basically uses a Metropolis step within a Gibbs sampler. This is sometimes necessary when it’s difficult or impossible to sample directly from the full conditional distributions required for a pure Gibbs Sampler.

Here’s the basic working principle of the Metropolis in Gibbs algorithm:

- Initialization : Choose an initial state for all variables in your model

- Iteration : For each iteration of the algorithm, do the following

- Fix the values of all other variables at their current states

- Sample a candidate value for the current variable from its proposal distribution

- Calculate an acceptance probability for old variable and new variable

- Assign the value with probability p:min(1,pnew/pold). Otherwise it remains assigned the old value

- Record the assigned values of all variables as a sample

It is often easier to maintain a reasonable acceptance rate in Metropolis in Gibbs than in the pure Metropolis algorithm.

The price of this is that all individual steps are linear on the unknown variables, and so sometimes the Metropolis in Gibbs random walk will have difficulty crossing from on area of high probability to another. This can be a problem if the distribution being estimated is multimodal (more than one peak/mode)

개별적으로 순차적으로 업데이트 한다는 것이다. Example

Burn period

Since we arbitrarily assign initial value, initial samples are often biased. It is best to discard the first n samples. This is called the burn period

- This bias will eventually be overcome. But if we don’t have a burn period, it requires more sample

- You

shouldalways use a burn period

Thinning

Sampling is most efficient if the samples are uncorrelated. Since MCMC samples are very correlated, so we want to reduce its correlation by only keeping every 1 in n samples. This is called thinning

- Thinning is useful only if the improvement obtained by somewhat de-correlating the samples outweights the improvement we would have got from working with more samples

- Therefore, thinning is

optional

Where is the Markov Chain?

The states are the samples, with the initial state the initial assignment of values

The transition matrix is implicitly determined by the conditional by the conditional distributions and the process involved in generating a new sample/state

Intervention Analysis using Causal Networks

We intervene in a system when we force a variable to take a particular value.

Note this it not the same as estimating the effect of finding that the variable takes a value, since the latter tells us information about the causes of that variable.

If C is found to take a value, this tells us something not only about D and E but also about A.

But if we force C to take a value, this tells us nothing about A

Therefore, we can estimate the effects of intervention by removing all edges into forced variables, an then performing inference as usual.

소중한 공감 감사합니다