5. Markov Chains

- -

Markov Chains

Markov Chains appear all over computer science, mathematics and AI. Just some of the applications

- Biology : birth death process, disease spreading

- Biology : DNA/RNA/Protein sequence analysis

- Speech recognition

- Control theory, filtering

Markov chain과 Markov process와의 차이점이 무엇인가?

일반적으로 Markov property를 만족하는 것을 Markov process라고 하고, 이 중 discrete한 경우를 Markov chain이라고 많이 부른다. 사실 2개의 용어를 크게 섞어써도 무방하기는 하다.

Assumption

- The system is in one of a number of states : Si

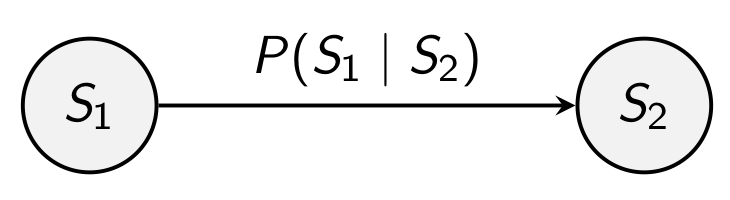

- For each pair of states Si and Sj, we have some probability pi,j=P(Si∣Sj) of going from state Si to Sj

- The probability only depends on

a finite number of previous states

예를 들어 날씨 예보 모델에서 하루하루의 날씨를 Markov chain으로 모델링한다면, '오늘'의 날씨는 '어제'의 날씨에만 의존하고 '그제'나 그 이전의 날씨는 고려하지 않는 것이다.

이러한 성질을 Markov property 혹은 memoryless 라고 불린다.

따라서 우리는 system을 sequence of state 로 기술할 수 있게 된다.

state 를 정의하는 것이 굉장히 중요하다. 즉 주어진 문제나 데이터에 가장 적합한 방식으로 state를 잘 정의하는 것은 Markov Chain 모델링에서 중요한 과제이다.Questions you can ask of Markov Models

- Given probabilities what is the most likely sequence of states?

- If I let the system run for a long time, what is the probability distribution of the states that I will end up in? What is the most likely state I will end up in?

- Given a sequence of states, how likely is that sequence based on our model?

- Can I estimate transition probabilities from the data?

- Divide states into observable and hidden states. Given a sequence of observed states, what is the

most likely sequence of hidden statesthat give you the observed states?💡이를 hidden Markov model이라고 한다.

Example - Rain or no Rain

- Two states S=0 sunny, and R=1 raining

- Initial probability distributionS0=(0.5,0.5)

50% chance of sunny, 50% chance of rain.

- We need four numbers p(S∣R),p(S∣S),p(R∣R),p(R∣S)[p(S∣S)p(S∣R)p(R∣S)p(R∣R)]=[0.80.40.20.6]💡

transition probability를 matrix 형태로 표현한 것이다. 행렬 형태로 표현하면 수학적으로 계산을 좀 더 간편하게 할 수 있다. (이러한 행렬을transition matrix라고 한다.)💡앞에서 언급한 것처럼 system 자체를 state들의 sequence로 바라보겠다는 것인데, 해당 state들의 시간의 따른 변화에 대한 distribution 정보를 담고 있는 행렬이라고 생각해주면 된다.

After one day, what is the probability that it is sunny?

After one day, what is the probability that it is rainy?

Therefore, we can interpret this result as

General Setting

Given n states (1,…,n), we have a n by n matrix T

Given the initial distribution of states S0 then after n steps we end up in the distribution:

homogeneous Markov chain 이라고 하고, 변하는 경우에는 non-homogeneous Markov chain이라고 부른다.stationary distribution 으로 간다는 보장을 반드시 할 수 있는 것은 아니다. Convergence 여부는 따로 수학적으로 증명해주어야 한다.Sampling from a Markov Chain

Useful for simulation

- Sample the initial state, randomly pick a state with the required probabilities

- Calculate the new state probabilities and sample again with the required probabilities

Monte Carlo Markov Chain (MCMC)라고 부른다. 이를 통해서 복잡한 확률 분포로부터 샘플을 추출할 수 있게 된다. 충분한 시간 동안 실행된 후 생성된 sample들은 원하는 target distribution으로부터 추출된 것처럼 간주할 수 있다.Example

Two states A and B with probability distribution

and transition matrix

Sampling means that you pick A or B you have a 65% chance of picking A

Suppose you pick A, this gives that state vector

Calculate

Then sample state A and B with probabilities 0.3 and 0.7

Hidden Markov Models

이전까지의 Markov model의 경우에는 observe 할 수 있는 state만을 다루었다. 반면 Hidden Markov Model의 경우에는 관찰할 수 없는 state들이 Markov property를 가지고 이러한 state가 observation에 영향을 주는 system을 다룬다.

state 가 Markov property를 가지는 것이다.Applications

- Speech recognition : You observe the sound (observation), and the hidden state is what word is being spoken

- Error correcting codes : You observe a noisy bit-stream (observation), and you want to infer the sent message (hidden state)

- Language processing : Given a sentence “The band goes whoop” work out the probability that “whoop” is an adverb or a noun (find hidden state) from it place in the sentence (observation)

- Biological sequence analysis : Genetic sequence have lots of codes, start and stop sequences, protein codes, etc. You observe the raw sequence AGTAGAATAA (observation) and you want to know what it is doing (hidden state).

Example

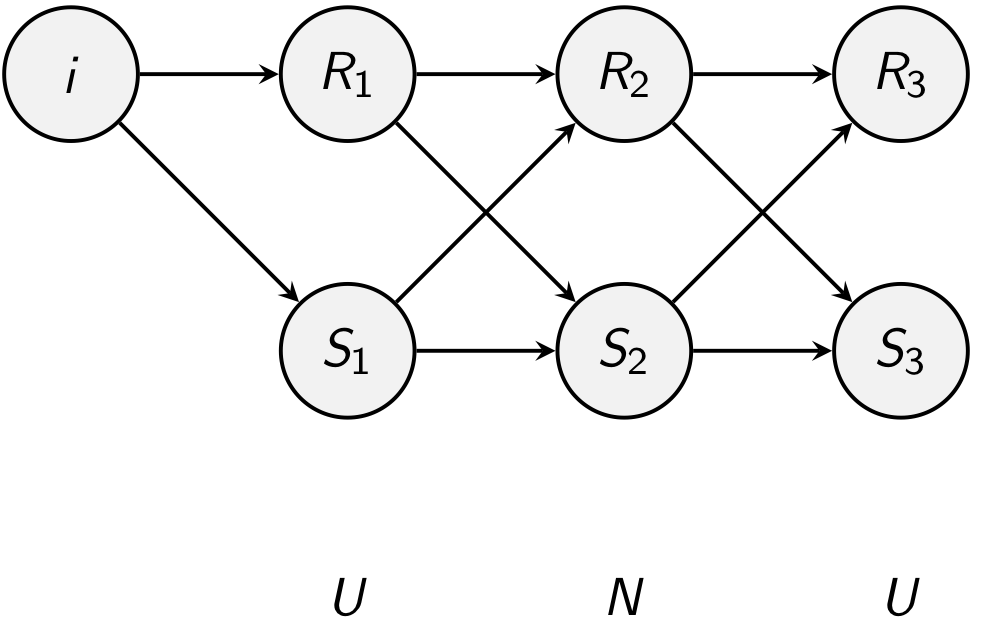

Divide the world up into states (Raining, R=0, and Sunny S=1) and observations (if my mother takes my Umbrella, U=1, and not takes my Umbrella, N=0)

As before, initial probability distribution is

Again we have a transition matrix for the states:

Unlike before we need to know the probabilities that an umbrella will be used or not

current hidden state에만 의존한다.Suppose I observe for three days and see U,N,U. What is the probability of this sequence?

If I knew that the hidden states on those three days were R,S,R then I can use the conditional probabilities

For three days there are 8 possible sequence of internal hidden states

But you have to note that each term are not equally likely. So we have to weight each item by multiplying the probability that observation occurs

But this approach is impractical. So we are going to use dynamic programming instead

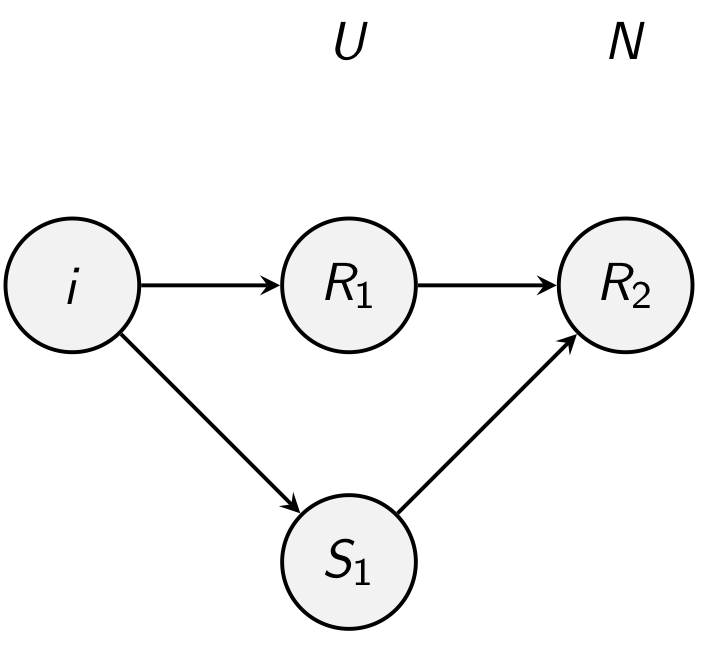

By Bayes’ theorem

But we have the same factor p(U) in each term. So we just calculate the numerator and scale it.

Then how do we calculate p(R2∣UN). The Markov assumption is that the current state only depends on the previous state. We can get R2 from R1 or S1, and we have already calculated those probabilities

Since, U is only related to R2

In addition

Therefore,

Also,

이를 행렬식으로 표현하면 다음과 같다

where ∗ is element-wise product

Smoothing

Given that I know what state I’m in and I have an observation what is the probability of the previous states. Different from the forward calculation, we know what state we are in.

→ This can be solved with a combination of forward and backward reasoning.

Viterbi’s Algorithm

Given a sequence of observations, what is the most likely sequence of hidden states that give that sequence

→ 즉, 주어진 observation들을 잘 표상하는 hidden state를 찾는 것이 목표이다.

Basic idea

- We are calculating the most probable path from S1 to Sn that passes through Sk

- Then it is concatenation of the most probable path from S1 to Sk and the most probable path from Sk to Sn

→ 즉, Sk 전후로 가장 probable한 path를 찾은 뒤에 concatenation하겠다는 전략이다.

소중한 공감 감사합니다