6. Approximation and fitting

- -

Norm approximation

Basic norm approximation problem

Assume that the columns of A are independent.

where A∈Rm×n with m≥n, ∥⋅∥ is a norm of Rm

Geometric interpretation

Geometrically, the solution x∗ is the point such that Ax∗∈R(A) that closest to b. The vector

is called the residual for the problem; its components are sometimes called the individual residuals associated with x.

Estimation interpretation

It can be interpreted as a problem of estimating a parameter vector based on an imperfect linear vector measurement. We consider a linear measurement model

where y∈Rm is a vector measurement, x∈Rn is a vector of parameters to be estimated, and v∈Rm is some unknown measurement error, presumed to be small in the norm ∥⋅∥. The estimation problem is to make a sensible guess as to what x is for given y.

If we guess that x has the value x^, the most plausible guess for x is

Example

- least squares approximation (∥⋅∥2)

The most common norm approximation problem involves the Euclidean or l2-norm. By squaring the objective, we obtain an equivalent problem which is called the least-squares approximation problem

minimize ∥Ax−b∥22=r12+⋯+rm2where the objective is the sum of squares of the residuals. It can be solvable by normal equations

ATAx=ATbIf A has a full rank (i.e. the rows of A are independent), the least-squares approximation problem has a unique solution

x=(ATA)−1ATb

- Chebyshev approximation (∥⋅∥∞)

When the l∞-norm is used, the norm approximation problem

minimize ∥Ax−b∥∞=max{∣r1∣,…,∣rm∣}is called the

Chebyshev approximation problemorminimax approximation problemsince we are to minimize the maximum absolute value of residuals. The Chebyshev approximation problem can be solved as an LPminimize tsubject to

−t1⪯Ax−b⪯t1with variables x∈Rn and t∈R

- Sum of absolute residuals approximation (∥⋅∥1)

When the l1-norm is used, the norm approximation problem

minimize ∥Ax−b∥1=∣r1∣+⋯+∣rm∣is called the

sum of absolute residuals approximation problem, or in the context of estimation, it is called arobust estimator. Like the Chebyshev approximation problem, this problem can be expressed as an LPminimize 1Ttsubject to

−t⪯Ax−b⪯twith variables x∈Rn and t∈R

Penalty function approximation

In lp-norm approximation, for 1≤p<∞, the objective is

As in least-squares problems, we can consider the equivalent problem with objective

which is a separable and symmetric function of the residuals. In particular, the objective only depends on the amplitude distribution of the residuals (i.e. the residuals in sorted order)

We will consider a useful generalization of the lp-norm approximation problem that only depends on the amplitude distribution of the residuals.

The penalty function approximation problem has the form

subject to

where ϕ:R→R is called the residual penalty function.

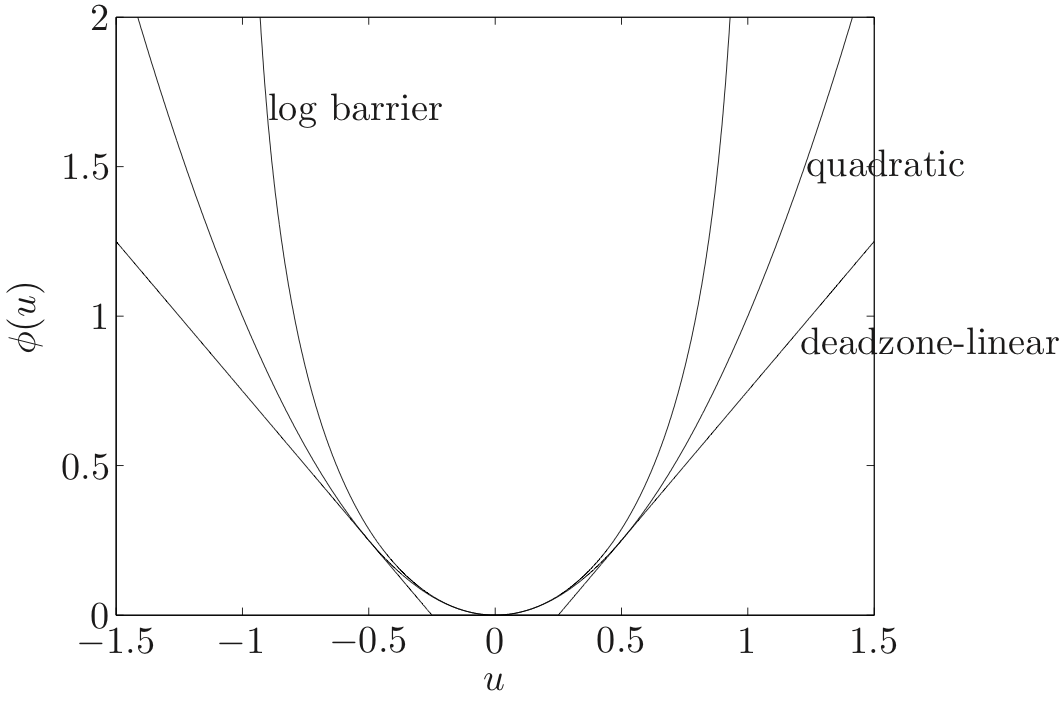

Examples

- quadraticϕ(u)=u2💡It is the most typical penalty function because we already know the analytical solution of it.💡Unlike the L1 norm, the penalty function value increases faster as the residual grows larger.

- deadzone linear with width aϕ(u)=max{0, |u|-a}💡Neglect the residual if its values is less than a

- log-barrier with limit aϕ(u)={−a2log(1−(u/a)2∞∣u∣<aotherwise💡It goes to infinity if a residual located in the outside of the limit.💡If the residual is small, the penalty function behaves similarly to a quadratic function. However, if the residual is large, its value will increase much more rapidly than that of a quadratic function.

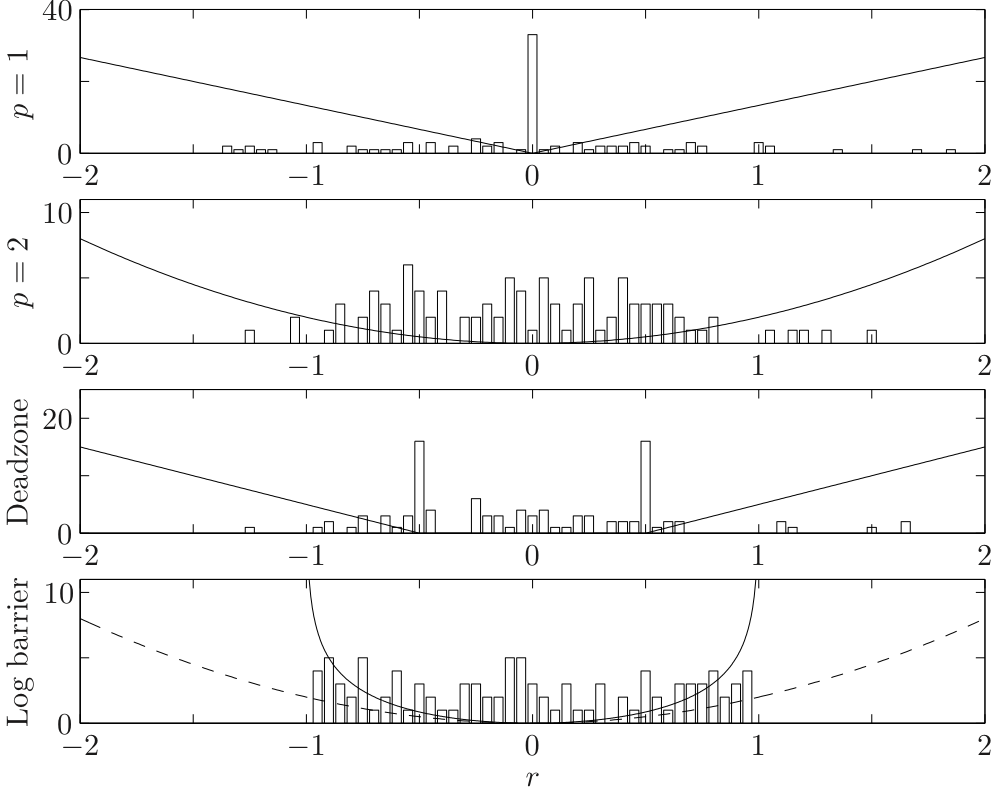

We take a matrix A∈R100×30 and vector b∈R100, and compute the l1-norm and l2-norm approximate solutions of Ax≈b, as well as the penalty function approximations with a dead zone linear penalty with a=0.5 and log barrier penalty with a=1. The following figure shows the four associated penalty functions and the amplitude distributions of the optimal residuals for these four penalty approximations.

Several features can be derived from the amplitude distributions

- For the l1-optimal solution, many residuals are either zero or very small. The l1-optimal solution also has relatively larger residuals than the others.💡Compared to l2-norm, this converges to zero for relatively small residuals due to the higher penalty imposed when the residuals are small.

- The l2-norm approximation has many modest residuals, and relatively few larger ones.💡When the residual is small, the value of the penalty value is already small enough so that we can quit the procedure.

- For the dead zone linear penalty, we see that many residuals have the value ±0.5, right at the edge of the free zone.

- For the log barrier penalty, we see that no residuals have a magnitude larger than 1, but otherwise the residual distribution is similar to the residual distribution for l2-norm approximation.

Penalty function approximation with sensitivity to outliers

In the estimation or regression context, an outlier is a measurement yi=aiTx+vi for which the noise vi is relatively large. This is often associated with faculty data or a flawed measurement. When outliers occur, any estimate of x will be associated with a residual vector with some large components.

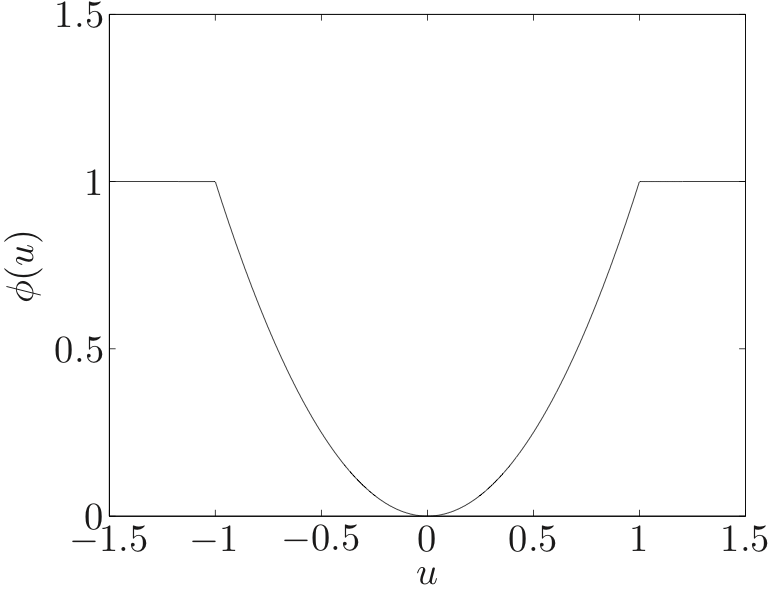

Ideally, we would like to guess which measurements are outliers, and either remove them from the estimation process or greatly lower their weight in forming the estimate. This could be accomplished using penalty function approximation such as

This penalty function agrees with least-squares for any residual smaller than M, but puts a fixed weight on any residual larger than M, no matter how much larger it is.

The problem is that, like we can see above, it is not a convex function. The sensitivity of a penalty function depends on the value of the penalty function for large residuals. If we restrict ourselves to convex penalty functions, the ones that are least sensitive are those for which ϕ(u) grows linearly (like l1-norm).

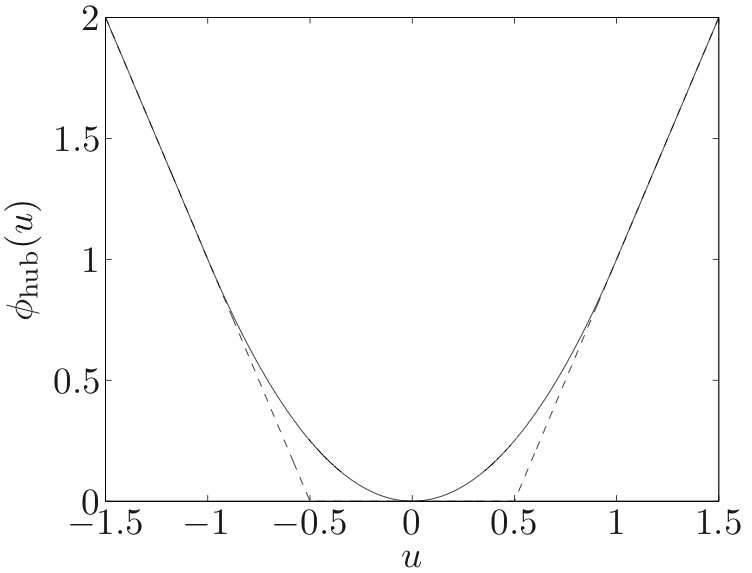

robust, since the associated penalty function approximation methods are much less sensitive to outliers than least-squares.One obvious example of a robust penalty function is ϕ(u)=∣u∣. Another example is the robust least-squares or Huber penalty function given by

robust estimation or robust regressionLeast norm problems

The basic least-norm problem has the form

subject to

where the data are A∈Rm×n with m≤n and b∈Rm, the variable is x∈Rn, and ∥⋅∥ is a norm on Rn. Assume that the rows of A are independent.

- Geometric interpretation

x∗ is a point in affine set {x∣Ax=b} with minimum distance to 0

- Estimation interpretation

b=Ax are perfect measurement of x. x∗ is the smallest estimate consistent with measurements.

💡Assume we don’t have enough measurement to identify a parameter perfectly (the nullity is not zero), but measurements are perfect.

Example

- Least-squares solution of linear equations (∥⋅∥2)

By squaring the objective we obtain the equivalent problem

minimize ∥x∥22subject to

Ax=bLike the least-squares approximation problem, this problem can be solved analytically. By introducing the dual variable ν∈Rm, the optimality conditions are

2x∗+ATν∗=0Ax∗=bThen

ν∗=−2(AAT)−1bx∗=AT(AAT)−1b💡Since rank A=m<n, the matrix AAT is invertible

- Sparse solutions via least l1-norm

l1-norm approximation gives relatively large weight to small residuals so that it produces a solution x with a large number of components equal to zero.

💡l1-norm problem tens to producesparsesolutions of Ax=b

- Least penalty problemminimize ϕ(x1)+⋯+ϕ(xn)

subject to

Ax=bwhere ϕ:R→R is a convex penalty function.

Regularized approximation

The goal is to find a vector x that is small, and also makes the residual Ax−b small. This is naturally described as a convex vector optimization problem with two objectives.

where A∈Rm×n and norms on Rm and Rn can be different.

Regularization

Regularization is a common scalarization method used to solve the bi-criterion problem. One form of regularization is to minimize the weighted sum of the objectives

where γ>0 is a problem parameter.

Another common method of regularization is to minimize the weighted sum of squared norms

The most common form of regularization is

It is called Tikhonov regularization. It has the analytical solution

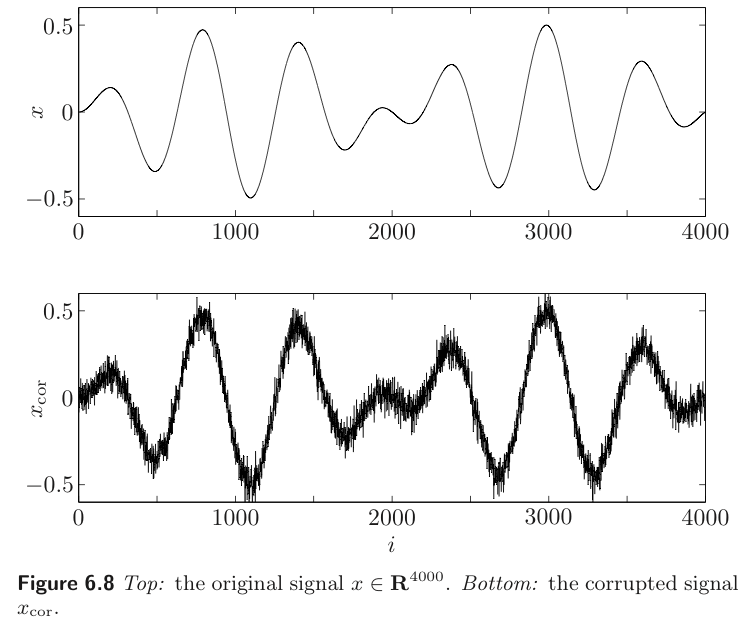

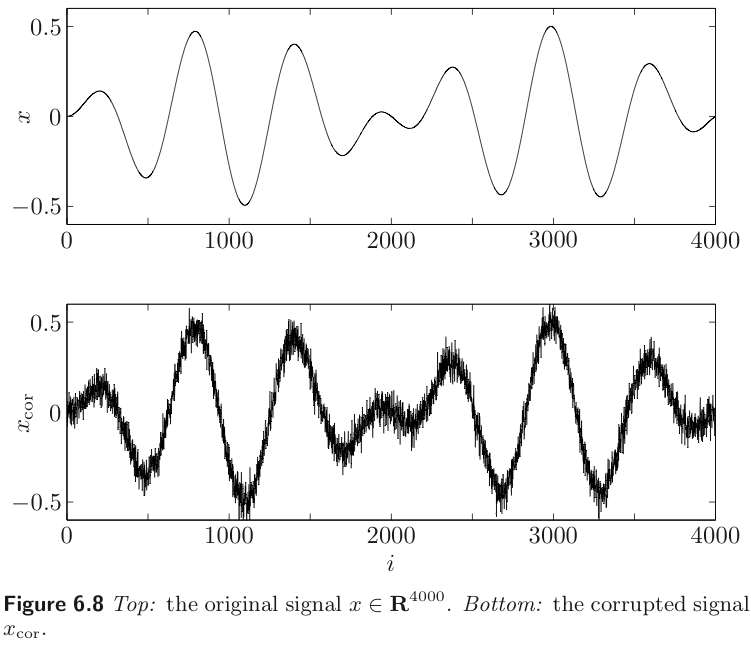

Signal reconstruction

In reconstruction problems, we start with a signal represented by a vector x∈Rn. The coefficients xi correspond to the value of some function of time, evaluated at evenly spaced points.

The signal x is corrupted by an additive noise v

The goal is to form an estimate x^ of the original signal x, given the corrupted signal xcor. This process is called signal reconstruction.

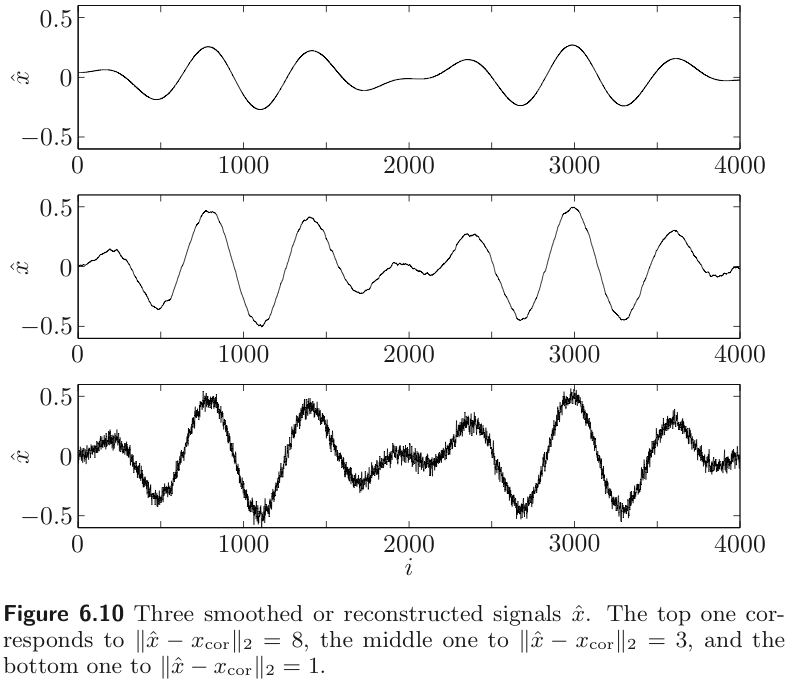

smoothingOne simple formulation of the reconstruction problem is the bi-criterion problem

where ϕ:Rn→R is convex, and it is called regularization function or smoothing objective.

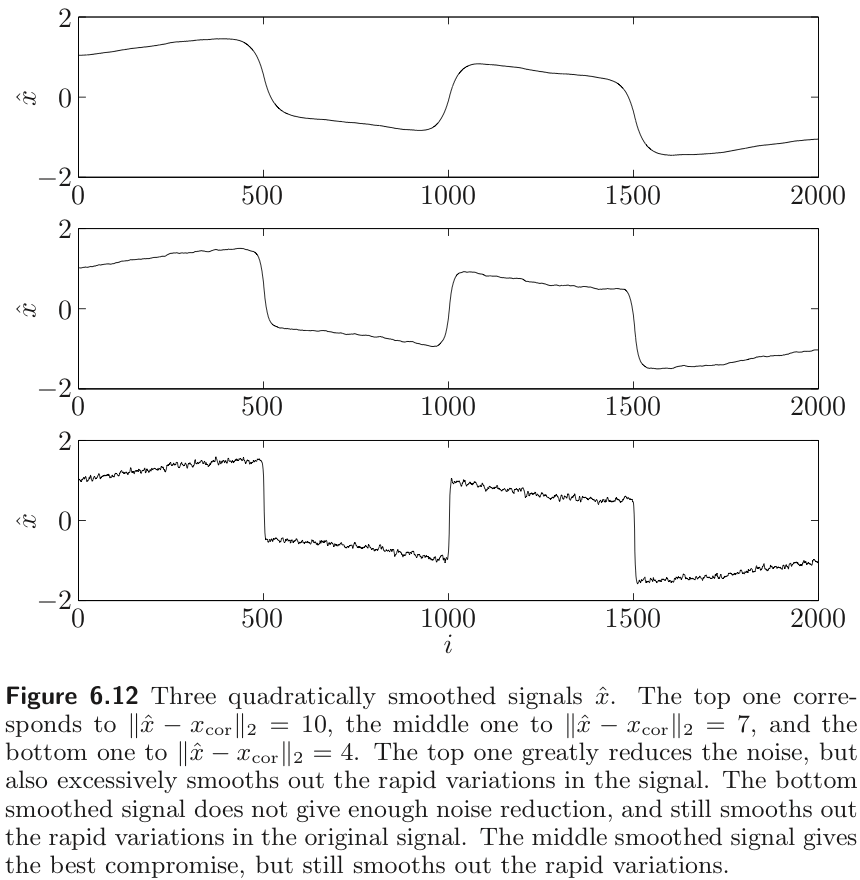

Example : Quadratic smoothing

The simplest reconstruction method uses the quadratic smoothing function

where D∈R(n−1)×n is the bidiagonal matrix.

We can obtain the optimal trade-off between ∥x^−xcor∥2 and ∥Dx^∥2 by minimizing

where δ>0 parameters the optimal trade-off curve.

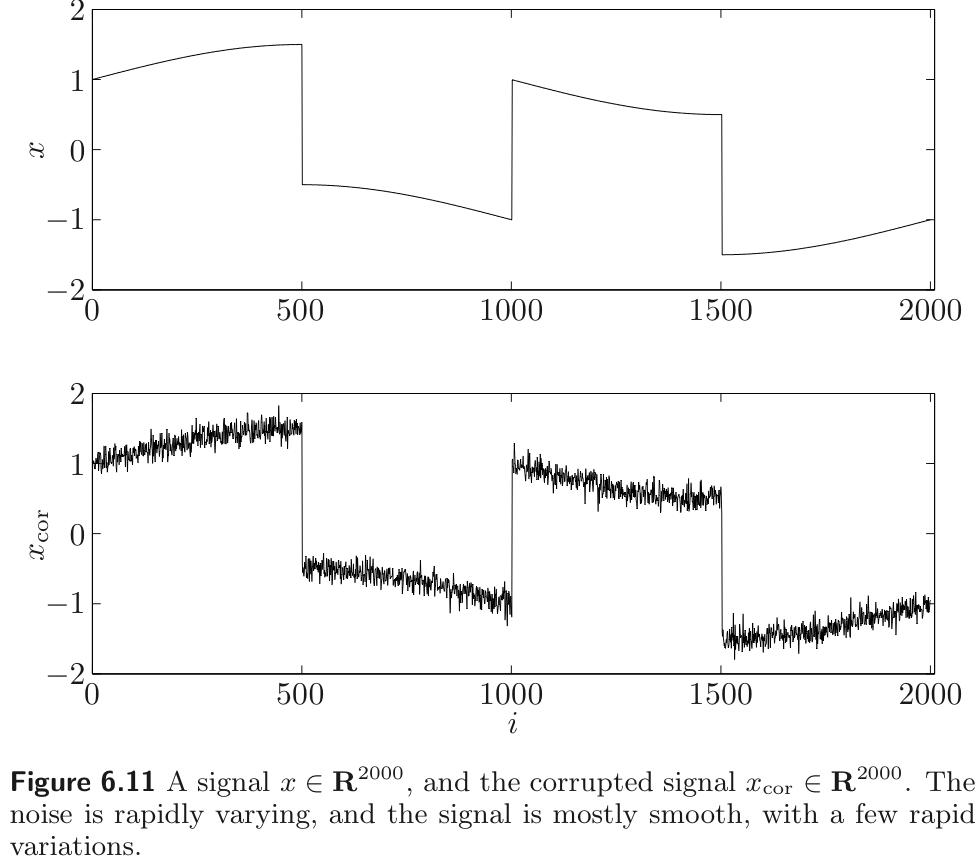

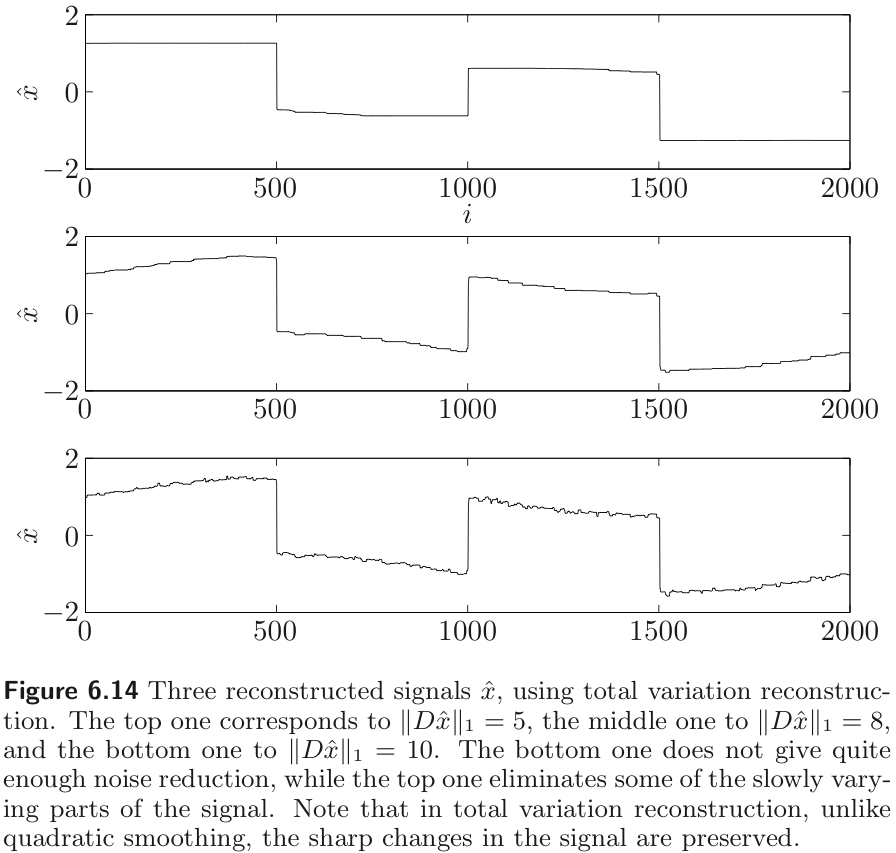

Example : Total variation reconstruction

Simple quadratic smoothing works well as a reconstruction method when the original signal is very smooth, and the noise is rapidly varying. But any rapid variations in the original signal will be removed by quadratic smoothing.

Total variation reconstruction method can remove much of the noise, while still preserving rapid variations in the original signal. The method is based on the smoothing funciton

Therefore, we have to choose the smoothing objective carefully regarding of the characteristic of the noise and signal.

Robust approximation

We consider an approximation problem with basic objective ∥Ax−b∥, but also wish to take into account some uncertainty or possible variation in the data matrix A. There are two approches to solve this problem.

- stochastic : assume A is random and minimize E(∥Ax−b∥)

- worst-case : set A of possible values of A and minimize supA∈A∥Ax−b∥

Stochastic robust approximation

We assume that A is a random variable taking values in Rm×n, with mean Aˉ. Then

where U is a random matrix with zero mean.

It is natural to use the expected value of ∥Ax−b∥ as the objective

We refer to this problem as the stochastic robust approximation problem.

Worst-case robust approximation

It is also possible to model the variation in the matrix A using a worst case approach. We describe the uncertainty by a set of possible values for A

which we assume is non-empty and bounded. We define the associated worst-case error of a candidate approximate solution x∈Rn as

which is always a convex function of x.

The worst-case robust approximation problem is to minimize the worst case error

where the variable is x, and the problem data are b and the set A.

It is always a convex optimization problem, but its tractability depends on the norm used and the description of uncertainty of A.

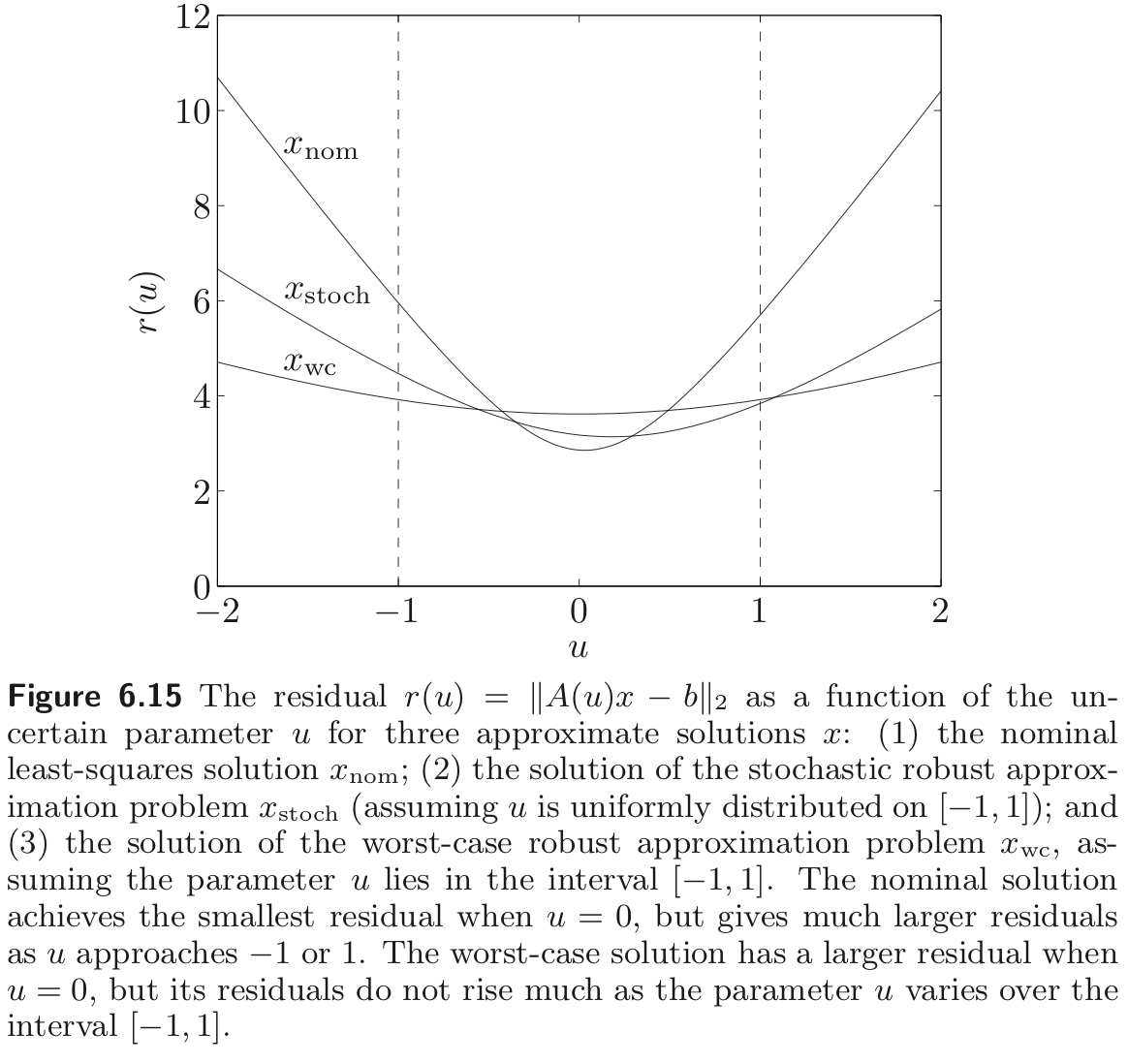

Example

To illustrate the difference between the stochastic and worst-case formulations for the robust approximation problem, we consider the least squares problem

where u∈R is an uncertain parameter and A(u)=A0+uA1. We consider a specific instance of the problem, with A(u)∈R20×10, ∥A0∥=10,∥A1∥=1, and u in the interval [−1,1].

We find three approximate solutions

- Nominal optimal : The optimal solution xnom is found, which minimize ∥A0x−b∥22

- Stochastic robust approximation : We find xstoch, which minimizes E(∥A(u)x−b∥22, assuming the parameter u is uniformly distributed on [−1,1]

- Worst-case robust approximation. We find xwc, which minimizes−1≤u≤1sup∥A(u)x−b∥2

Example : stochastic robust Least squares

Consider the stochastic robust least-squares problem

where A=Aˉ+U, U is a random matrix with zero mean.

We can express the objective as

where P=E(UTU)

Therefore, the stochastic robust approximation problem has the form of regularized least-squares problem

with solution

When the matrix A is subject to variation, the vector Ax will have more variation the laarger x is, and Jensen’s inequality tells us that variation in Ax will increase the average value of ∥Ax−b∥2. So we need to balance making Aˉx−b small with the desire for a small x to keep the variation in Ax small.

For P=δI, we can get Tikhonov regularized least squares problem

Example : worst case robust least squares

Let

Consider the worst case robust least-squares problem

where P(x)=[A1xA2x⋯Apx],q(x)=Aˉx−b

Note that the strong duality holds between the following problems

- Primal problemmaximize ∥Pu+q∥22

subject to

∥u∥22≤1💡Intuitively, we can solve this problem by finding a maximum singular value.

- Dual problemminimize t+λ

subject to

IPTqTPλI0q0t⪰0

Therefore, the Lagrange dual of this problem can be expressed as the SDP

subject to

with variables t,λ∈R.

For fixed x, we can compute sup∥u∥2≤1∥P(x)u+q(x)∥22 by solving the SDP with variables t and λ. In other words, optimizing jointly over t,λ, and x is equivalent to minimizing worst case error ewc(x)2.

소중한 공감 감사합니다