3. Convex function

- -

Convex function

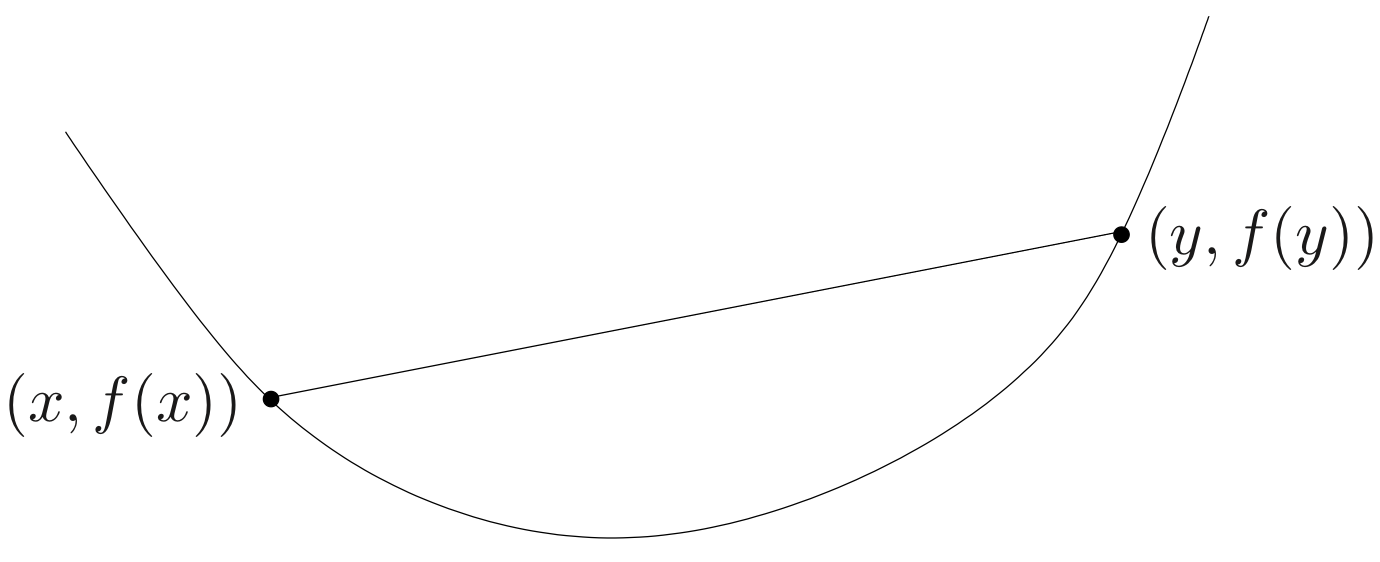

f:Rn→R is convex if dom f is a convex set and

for all x,y∈dom f,0≤θ≤1

f is strictly convex if dom f is convex and

for all x,y∈dom f,0≤θ≤1

Examples

affine functions are convex and concave; all norms are convex

Examples on R

- affine : ax+b on R, for any a,b∈R

- exponential : eax, for any a∈R

- powers : xα on R++, for α≥1 or α≤0

- powers of absolute value : ∣x∣p on R, for p≥1

- negative entropy : −xlogx on R++

Examples on Rn

- affine function : f(x)=aTx+b

- norms : ∥x∥p=(∑i=1n∣xi∣p)1/p for p≥1; ∥x∥∞=maxk∣xk∣

Proof

∥θx+(1−θ)y∥≤∥θx∥+∥(1−θ)y∥≤θ∥x∥+(1−θ)∥y∥

Examples on Rm×n

- affine functionf(X)=tr(ATX)+b=i=1∑mj=1∑nAijXij+b💡Actually, tr(ATB)=⟨A,B⟩F is a generalized inner-product of two matricesFrobenius inner productIn mathematics, the Frobenius inner product is a binary operation that takes two matrices and returns a scalar. It is often denoted ⟨ A , B ⟩ F {\displaystyle \langle \mathbf {A} ,\mathbf {B} \rangle _{\mathrm {F} }} . The operation is a component-wise inner product of two matrices as though they are vectors, and satisfies the axioms for an inner product. The two matrices must have the same dimension - same number of rows and columns, but are not restricted to be square matrices.

https://en.wikipedia.org/wiki/Frobenius_inner_product

https://en.wikipedia.org/wiki/Frobenius_inner_product

- spectral (maximum singular value) normf(X)=∥X∥2=σmax(X)=(λmax(XTX))1/2💡The spectral norm of a matrix A is the largest singular value of A. It is a special case of p-norm when p is 2.Matrix normIn mathematics, we can define norms for the elements of a vector space. When the vector space in question consists of matrices, these are called matrix norms.

https://en.wikipedia.org/wiki/Matrix_norm

https://en.wikipedia.org/wiki/Matrix_norm

Restriction of a convex function to a line

f:Rn→R is convex if and only if the function g:R→R

is convex in t for any x∈dom f,v∈Rn

We can check convexity of f by checking convexity of functions of one variable

Example

f:Sn→R with f(X)=logdetX,dom f=S++n

where λi are the eigenvalues of X−1/2VX−1/2

g is concave in t (for any choice of X≻0,V); hence f is concave.

Note

Extended-value extension

It is often convenient to extend a convex function to all of Rn by defining its value to be ∞ outside its domain. If f is convex we define its extended-value extension f~ of f is

The extension can simplify notation, since we don’t need to explicitly describe the domain.

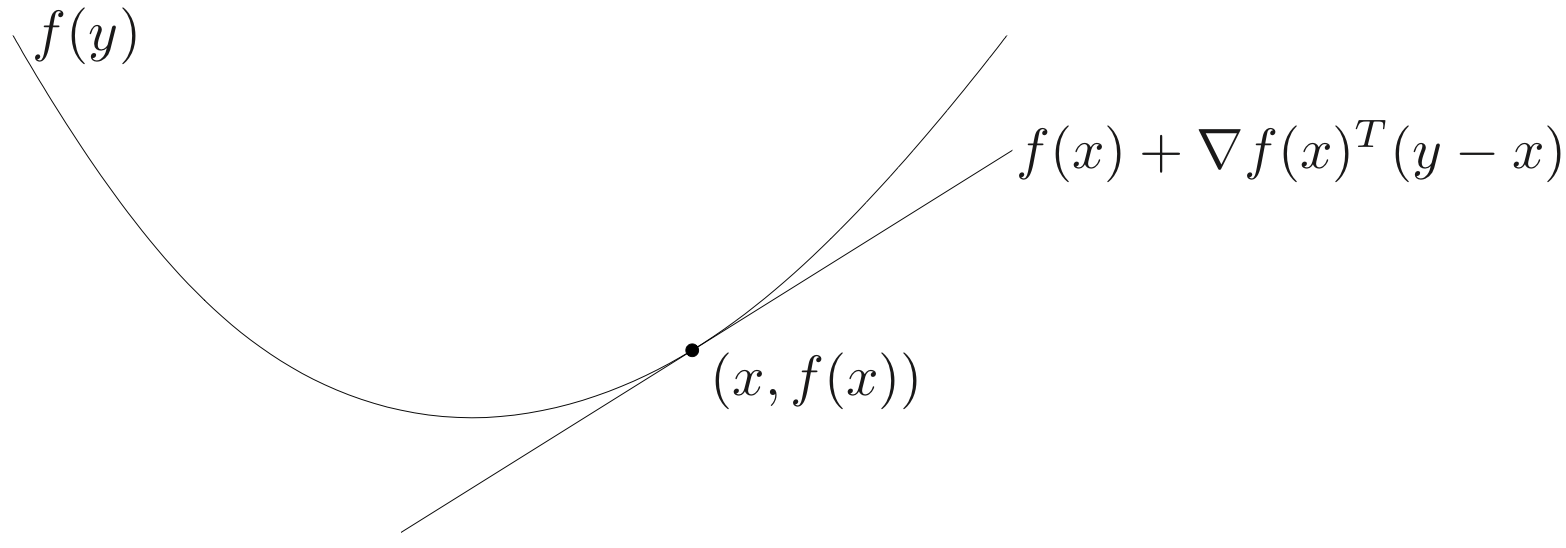

First-order condition

f is differentiable if dom f is open and the gradient

exists at each x∈dom f

1st-order condition : differentiable f with convex domain is convex if and only if

Second-order conditions

f is twice differentiable if dom f is open and the Hessian ∇2f(x)∈Sn,

or equivalently

where Hx is a hessian matrix at x.

2nd-order condition : for twice differentiable f with convex domain

- f is convex if and only if∇2f(x)⪰0,∀x∈dom f

- if ∇2f(x)≻0,∀x∈dom f, then f is strictly convex.

Example

- Quadratic function : f(x)=21xTPx+qTx+r (with P∈Sn)∇f(x)=Px+q,∇2f(x)=P

convex if P⪰0

- Least-squares objective : f(x)=∥Ax−b∥22∇f(x)=2AT(Ax−b),∇2f(x)=2ATA

convex for any A since ATA is always positive semi-definite.

- quadratic-over-linear : f(x,y)=x2/y∇2f(x,y)=y32[y−x][y−x]T⪰0

convex for y>0

- log-sum-exp : f(x)=log∑k=1nexpxk∇f(x)=(expx1/k=1∑nexpxk,expx2/k=1∑nexpxk,⋯,expxn/k=1∑nexpxk)T

Then,

∇ii2f(x)=expxi/k=1∑nexpxk+expxi∗−(∑k=1nexpxk)2expxi∇ij2f(x)=expxi∗−(∑k=1nexpxk)2expxj(i=j)Therefore,

∇2f(x)=1Tz1diag(z)−(1Tz)21zzT(zk=expxk)to show ∇2f(x) is positive semi-definite, we must verify that vT∇2f(x)v≥0,∀v

vT∇2f(x)v=(∑kzk)2(∑kzkvk2)(∑kzk)−(∑kvkzk)2By Cauchy-Schwarz inequality,

(k∑vkzk)2≤(k∑zkvk2)(k∑zk)So, vT∇2f(x)v≥0,∀v.

Therefore, ∇2f(x) is a positive semi-definite matrix.

💡This function is a smooth approximation of a maximum since the maximum number nominate the value. In deep learning or machine learning perspective, we call this function as a softmax function.

- geometric mean : f(x)=(∏k=1nxk)1/n on R++n is concave

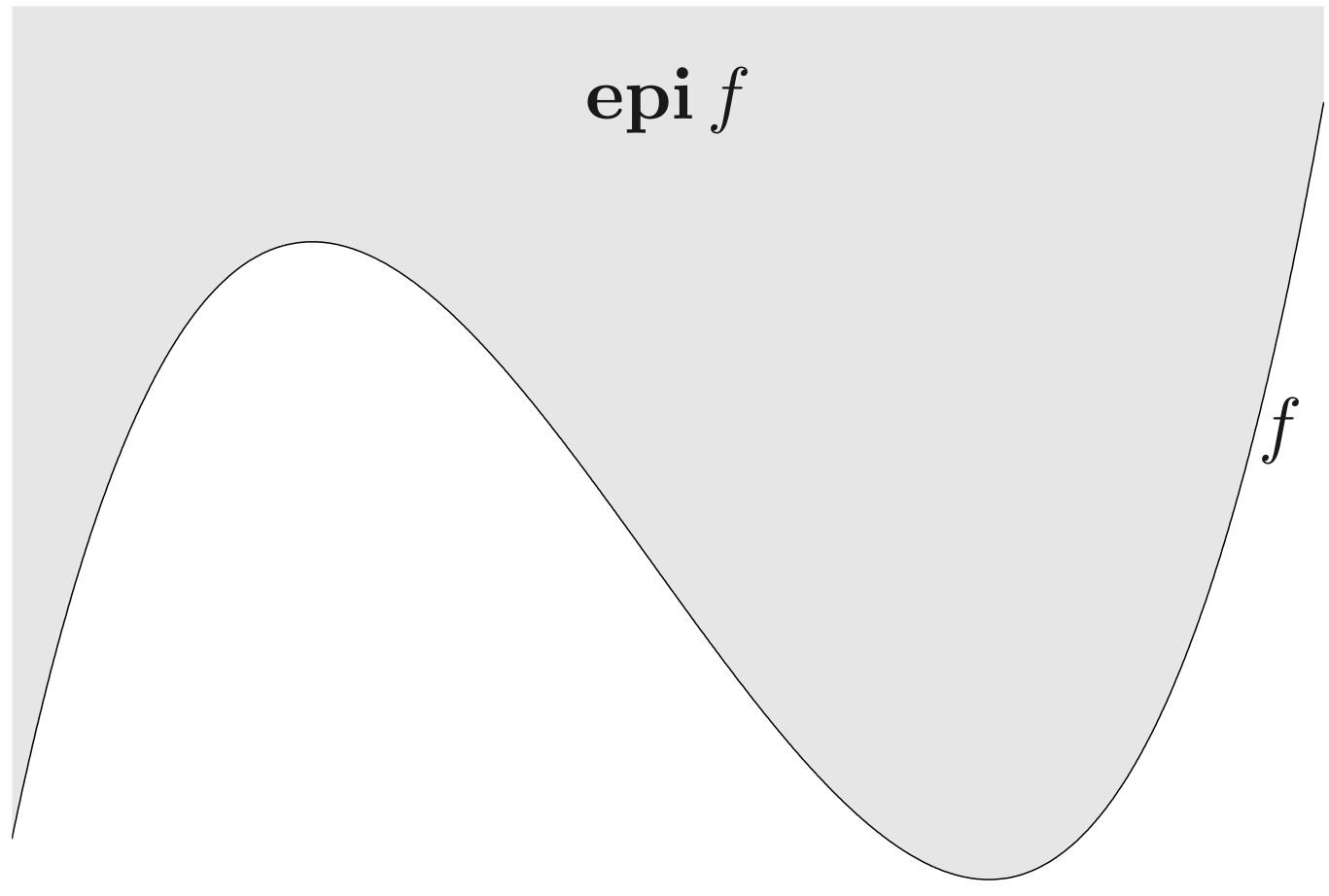

Epigraph and sublevel set

α-sublevel set of f:Rn→R:

sublevel sets of convex functions are convex

epigraph of f:Rn→R

Jensen’s inequality

basic inequality : if f is convex, then for 0≤θ≤1

extension : if f is convex, then

for any random variable z

Operations that preserve convexity

practical methods for establishing convexity of a function

- by using definition

- for twice differentiable functions, show ∇2f(x)⪰0,∀x

- show that f is obtained from simple convex functions by operations that preserve convexity

- nonnegative weighted sum

- composition with affine function

- point-wise maximum and supremum

- minimization

- composition with scalar function

- vector composition

- perspective

Composition with affine function

is convex if f is convex

Proof

Given x,y∈dom A,θ≥0

f(θ(Ax+b)+(1−θ)(Ay+b))=f(A(θx+(1−θ)y)+b)≤θf(Ax+b)+(1−θ)f(Ay+b)Therefore f(Ax+b) is a convex function.

Examples

- log barrier for linear inequalitiesf(x)=−i=1∑mlog(bi−aiTx)

where dom f={x∣aiTx<bi,i=1,…,m}

💡Since log is convex and it is composited by the affine function, it is also a convex function

- any norm of affine functionf(x)=∥Ax+b∥💡We already know that every norm is a convex function

Pointwise maximum

if f1,…,fm are convex, then f(x)=max{f1(x),…,fm(x)} is convex

Proof

Let x,y∈∩i=1mdom fi,θ≥0

Since fi is a convex function,

fi(θx+(1−θ)y)≤θfi(x)+(1−θ)fi(y)for all i=1,…,m

Without loss of generality, let f(θx+(1−θ)y)=fk(θx+(1−θ)y)

Then,

f(θx+(1−θ)y)=fk(θx+(1−θ)y)≤θfk(x)+(1−θ)fk(y)≤θf(x)+(1−θ)f(y)Therefore, f is a convex function.

Examples

- point-wise linear functionf(x)=i=1,…,mmaxaiTx+bi

is a convex function

- sum of r largest components of x∈Rnf(x)=x[1]+⋯+x[r]

where x[i] is i’th largest component of x

is a convex function

Actually,

f(x)=max{xi1+xi2+⋯+xir∣1≤i1<i2<⋯ir≤n}

Pointwise supremum

if f(x,y) is convex in x for each y∈A, then

is convex

Proof

Let x1,x2∈dom g,y∈A,θ≥0

g(θx1+(1−θ)x2)=y∈Asupf(θx1+(1−θ)x2,y)Since f is convex in x for each y∈A,

y∈Asupf(θx1+(1−θ)x2,y)≤y∈Asup[θf(x1,y)+(1−θ)f(x2,y)]≤y∈Asupθf(x1,y)+y∈Asup(1−θ)f(x2,y)≤θg(x1)+(1−θ)g(x2)Therefore, g is a convex set.

Actually, we use the property of supremum

xsup(f(x)+g(x))≤xsupf(x)+xsupg(x)Let supf(x)=α,supg(x)=β

Since α≥f(x1) for all x1∈dom f and β≥f(x2) for all x2∈dom g, α+β≥f(x)+g(x) for all x∈dom f+g

So, α+β is a upper bound of f+g

Therefore, supx(f(x)+g(x))≤supxf(x)+supxg(x)

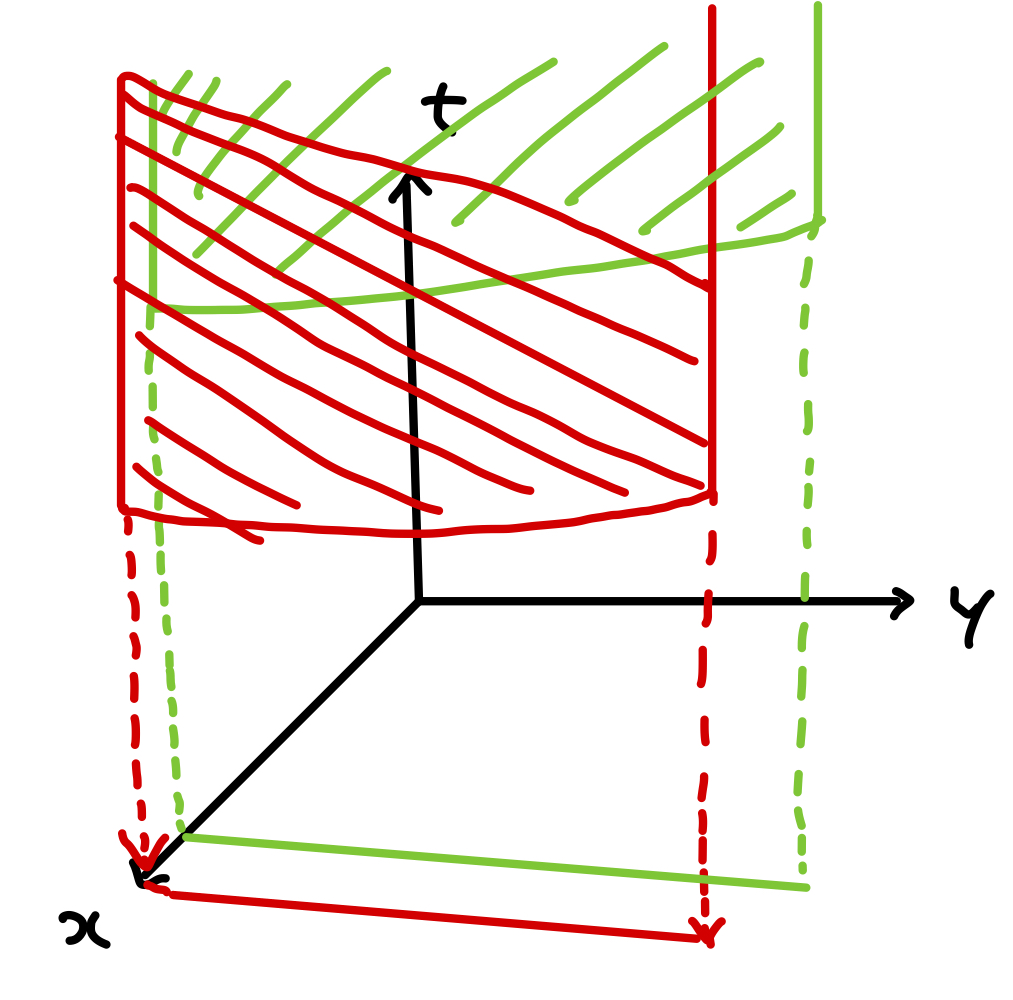

Epigraph interpretation

In terms of epigraphs, the point-wise supremum of functions corresponds to the intersection of epigraphs: with f,g. we have

Thus, the result follows from the fact that the intersection of a family of convex set is convex.

Red and green areas represent the epigraph of f(x,y) for a fixed x. As f is convex in x, both the red and green areas are convex sets. Our objective is to intersect the epigraph with a given point x to determine the maximum (supremum) value for the given x.

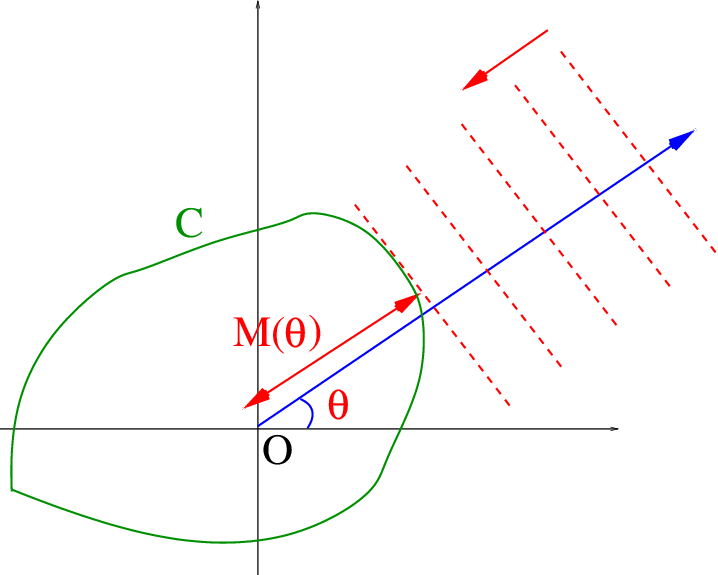

Examples

- support function of a set CSC(x)=y∈CsupyTx

The normal vector of the red dotted line is x 💡Note that C don’t have to be a convex set.

- distance to farthest point in a set Cf(x)=y∈Csup∥x−y∥

- maximum eigenvalue of symmetric matrixλmax(X)=∥y∥2=1supyTXy

where X∈Sn

💡yTXy is a linear function in x for each y.Proof

Since X∈Sn, there exists an orthonormal basis by spectral theorem.

Therefore,

Xy=Xi=1∑nyiei=i=1∑nyiλiei⇒yTXy=i=1∑nλi(yi)2First we want to show that max{yi∣i=1,…,n} is a upper-bound of yTXy

Without loss of generality, max{λi∣i=1,…,n}=λk

i=1∑nλi(yi)2≤λk(i=1∑n(yi)2)≤λkGiven ϵ>0, we want to show that there exists y∗ such that

λk−ϵ<y∗TXy∗<λkwhere ∥y∗∥2=1

Without loss of generality, λl is the second largest eigenvalue (k=l)

Take yl=2(λk−λl)ϵ,yk=2(λk−λl)2(λk−λl)−ϵ,yj=0 if j=k,l

Then,

i=1∑nyi=1Moreover,

i=1∑nλi(yi)2=λl2(λk−λl)ϵ+λk2(λk−λl)2(λk−λl)−ϵ=2(λk−λl)λlϵ+2λk(λk−λl)−λkϵ=λk−2ϵTherefore,

λmax(X)=∥y∥2=1supyTXy

Minimization

We have seen that the maximum and supremum of an arbitrary family of convex functions is convex. It turns out the some special forms of minimization also yields convex functions.

if f(x,y) is convex in (x,y) and C is a convex set, then

where g(x)>−∞ for all x

is convex.

We have to compare the condition for the point-wise supremum case. Note that f(x,y) is convex in (x,y). In point-wise supremum case, it is enough to satisfy only x

Proof

Let x1,x2∈dom g,θ≥0

For given ϵ>0, ∃y1,y2∈C such that

g(x1)<f(x1,y1)<g(x1)+ϵ/2g(x2)<f(x2,y2)<g(x2)+ϵ/2Therefore,

g(θx1+(1−θ)x2)=y∈Cinff(θx1+(1−θ)x2,y)≤f(θx1+(1−θ)x2,θy1+(1−θ)y2)≤f(θ(x1,y1)+(1−θ)(x2,y2))≤θf(x1,y1)+(1−θ)f(x2,y2)≤θg(x1)+(1−θ)g(x2)+ϵSince ϵ is arbitrary,

g(θx1+(1−θ)x2)≤θg(x1)+(1−θ)g(x2)Therefore, g is a convex function.

💡The fundamental idea is that we want to use convexity of f that makes slightly bigger than we want. We can control the amount of its value by using the definition of infimum.

Epigraph interpretation

Assume the infimum over y∈C is attained for each x (i.e. {f(x,y)∣y∈C} is closed set for each x). We have

Since the projection of a convex set on some of its components is convex, epi g is a convex set.

Example

- f(x,y)=xTAx+2xTBy+yTCy with[ABTBC]⪰0,C≻0

We can express g(x)=infyf(x,y) as

g(x)=xT(A−BC†BT)xwhere C† is the pseudo-inverse of C. Since g is convex, A−BC†BT⪰0

If C is invertible, then A−BC−1BT is called the

Schur complement ofCin the matrix[ABTBC]💡A convex quadratic function and if minimize that function over some variables, the result is quadratic form.Shur's Complement유도방법 아래 링크의 1-2페이지 자료를 확인한다. 블록 행렬을 연립방정식 형태로 정리하고 해를 구함으로써 그 형태를 자연스럽게 이해할 수 있다. (link1) (link2)http://ranking.uos.ac.kr/blog_page/post_src/post20231031.html

- distance to a set : dist(x,S)=infy∈S∥x−y∥ is convex if S is convex.

Composition with scalar functions

composition of g:Rn→R and h:R→R

f is convex if

- g convex, h convex, h~ non-decreasing

- g concave, h convex, h~ non-increasing

where h~ is a extended value extension

If we assume that n=1 and twice differentiable for g,h we can get an intuition

Think about this example,

This function is trivially increasing. However,

this function is not a increasing function.

Interpretation of h~

To say that h~ is nondecreasing means that for any x,y∈R (x<y), we have h~(x)≤h~(y).

That means y∈dom h⇒x∈dom h

Therefore, the domain of h extends infinitely in the negative direction; it is either R or an interval like (−∞,a) or (∞,a]

Similarly, h~ is non-increasing means that the domain of h extends infinitely in the positive direction; it is either R or an interval like (a,∞) or [a,∞)

Proof

Let g is convex, h is convex, h~ is nondecreasing and x,y∈dom f,θ≥0.

Since x,y∈dom f, x,y∈dom g

Moreover we already know that g is convex,

g(θx+(1−θ)y)≤θg(x)+(1−θ)g(y)Similarly since x,y∈dom f,

g(x),g(y)∈dom h⇒θg(x)+(1−θ)g(y)∈dom h (since dom h is convex set)⇒g(θx+(1−θ)y)∈dom h (since h~ is non-decreasing)Since h~ is non-decreasing,

h(g(θx+(1−θ)y))≤h(θg(x)+(1−θ)g(y))In addition, since θg(x)+(1−θ)g(y)∈dom h and h is a convex function,

h(θg(x)+(1−θ)g(y))≤θh(g(x))+(1−θ)h(g(y))Therefore,

h(g(θx+(1−θ)y))≤θh(g(x))+(1−θ)h(g(y))Therefore h∘g is a convex function.

Examples

- expg(x) is convex if g is convex💡We already know that exponential function is convex and increasing function on R

- 1/g(x) is convex if g is concave and positive.💡We already know that 1/x is concave and non-increasing

Vector composition

composition of g:Rn→Rk and h:Rk→R

f is convex if

- gi affine, h convex

- gi convex, h convex, h~ non-decreasing in each argument

- gi concave, h convex, h~ non-increasing in each argument

where h~ is a extended value extension

Proof

Without loss of generality, assume that gi convex, h convex, h~ non-decreasing in each argument.

Let x,y∈dom f,θ≥0 (i.e. x,y∈dom gi for each i and g(x),g(y)∈dom h)

Since gi is a convex function and x,y∈dom gi

gi(θx+(1−θ)y)≤θgi(x)+(1−θ)gi(y)Since h is convex, πi(dom h) is a convex set for each i.

So,

θgi(x)+(1−θ)gi(y)∈πi(dom h),∀iTherefore,

θg(x)+(1−θ)g(y)∈dom hMoreover, h~ is non-decreasing in each argument and θgi(x)+(1−θ)gi(y)∈πi(dom h),∀i,

gi(θx+(1−θ)y)∈πi(dom h),∀iTherefore,

g(θx+(1−θ)y)∈dom hIn addition,

h(g(θx+(1−θ)y))=h(g1(θx+(1−θ)y),⋯,gk(θx+(1−θ)y))≤h(θg1(x)+(1−θ)g1(y),⋯,gk(θx+(1−θ)y))≤h(θg1(x)+(1−θ)g1(y),⋯,θgk(x)+(1−θ)gk(y))=h(θ(g1(x),⋯,gk(x))+(1−θ)(g1(y),⋯,gk(x)))=h(θg(x)+(1−θ)g(y))≤θh(g(x))+(1−θ)h(g(y)) (since h is convex)Therefore h∘g is a convex function.

If gi is a affine function,

gi(θx+(1−θ)y)=θgi(x)+(1−θ)gi(y)gi(θx+(1−θ)y))∈πi(dom h)Therefore, there is no requirement of non-increasing or non-decreasing condition of h in this case.

We can actually interpret some convex preserving operations by using vector composition.

- adding convex function

We can interpret h as a summation of each argument. We already know that that kind of function is convex. (Actually, it is affine)

Moreover h~ nondecreasing in each argument.

Therefore, it is a convex function

- maximum values within convex functions

We can interpret h as a maximum of each argument. We already know that max function is convex. Moreover h~ is non-decreasing in each argument.

Therefore, it is a convex function.

Examples

- ∑i=1mloggi(x) is concave if gi are concave and positive💡Since we already know that log function is concave and it is non-decreasing

- log∑i=1mexpgi(x) is convex if gi are convex.💡Since we already know that log∑i=1mexpzi is a convex function and it is non-decreasing for each argument.

Perspective

the perspective of a function f:Rn→R is the function g:Rn×R→R

if f is convex, then g is convex.

Proof

(x,t,s)∈epi g⇔tf(x/t)≤s⇔f(x/t)≤s/t⇔(x/t,s/t)∈epi fSince f is a convex function, epi f is a convex sets. Moreover, perspective function preserve a convexity. So, epi g is a convex set.

Therefore, g is a convex function.

Example

- f(x)=xTx is convex, g(x,t)=xTx/t is convex for t>0

- negative logarithm : f(x)=−logx is convex; hence relative entropy (a.k.a

KL divergence)g(x,t)=tlogt−tlogx=−tlogtxis convex on R++2

- if f is convex, theng(x)=(cTx+d)f((Ax+b)/(cTx+d))

is convex on {x∣cTx+d>0,(Ax+b)/(cTx+d)∈dom f}

💡We already know that f(Ax+b) is convex

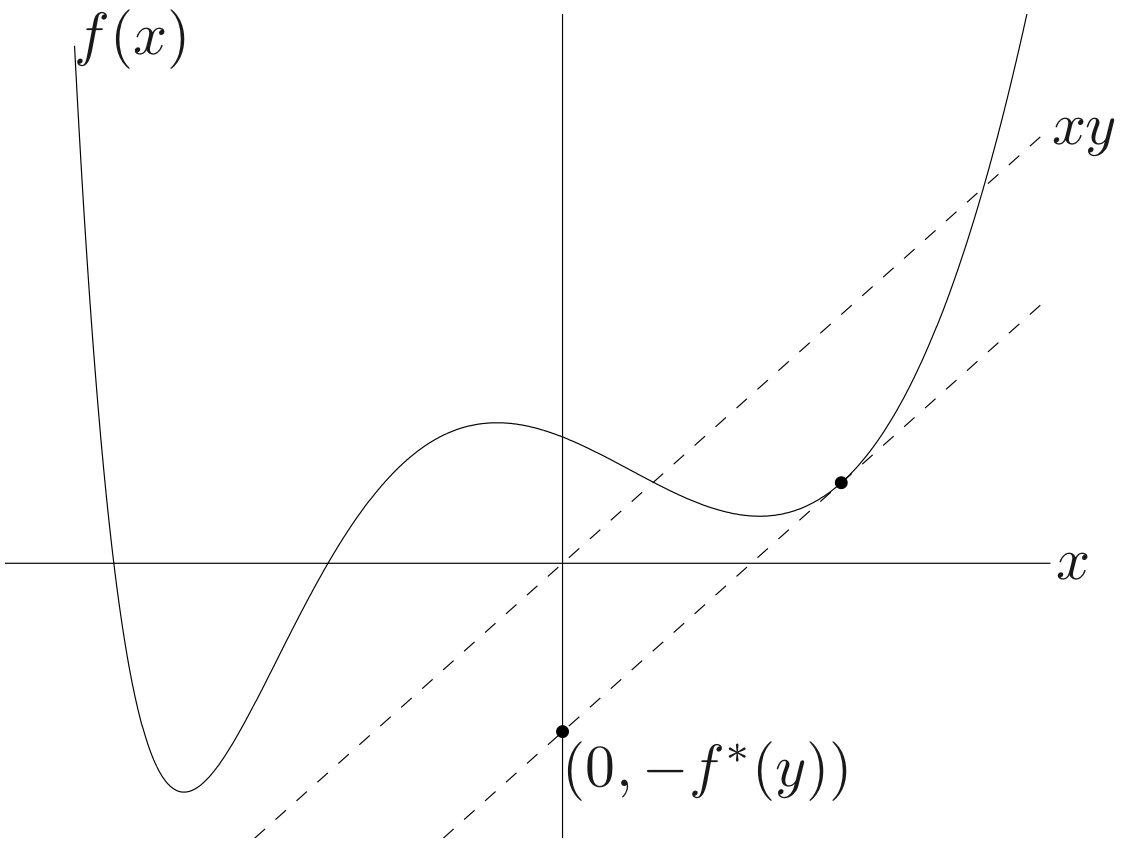

Conjugate function

the conjugate of a function f is

Let f(x) is a making cost, x is the number of product, y is the price for each product.

We want to maximize the profit. So our goal is to find the number of product maximize our benefit.

Note that f∗ is convex (even if f is not)

Proof

yTx−f(x) is affine with respect to y.

Moreover, it is the point-wise supremum of a family of convex (indeed, affine) functions of y. Therefore, it is actually a corollary of the supremum of the convex function is a convex function.

Examples

- negative logarithm : f(x)=−logxf∗(x)=x>0sup(xy+logx)={−1−log(−y)∞y<0otherwise

- strictly convex quadratic : f(x)=(1/2)xTQx with Q∈S++nf∗(y)=xsup(yTx−(1/2)xTQx)=21yTQ−1y💡Conjugate of quadratic form is the inverse matrix

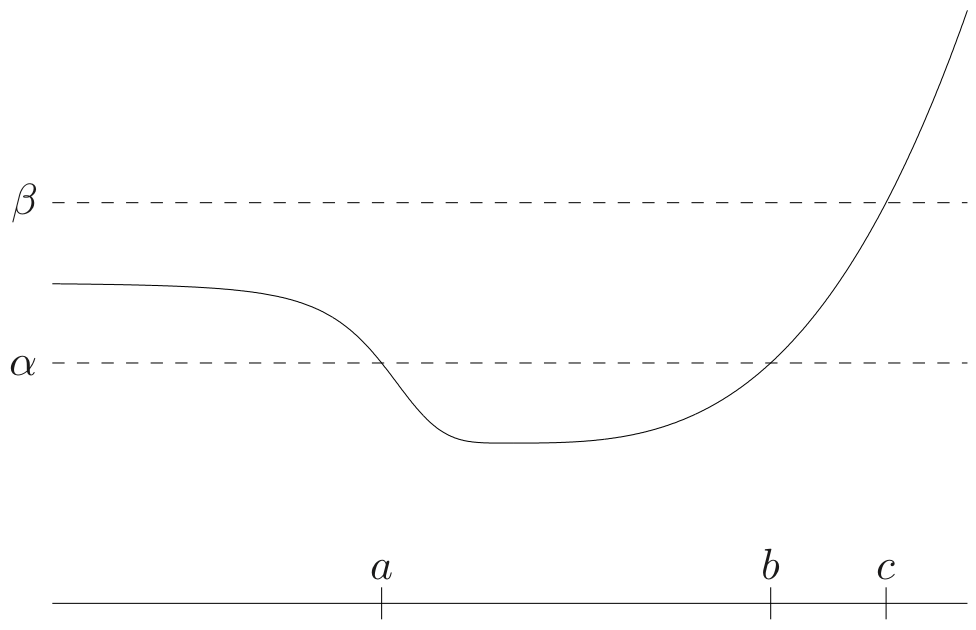

Quasi-convex functions

f:Rn→R is quasi-convex if dom f is convex and the sublevel sets

are convex for all α

A function is quasi-concave if −f is quasi-convex. A function that is both quasi-convex and quasi-concave is called quasi-linear.

Convex functions have convex sub-level sets, and so are quasi-convex.

Proof

We already know that epigraph of a convex function is a convex set. Moreover, {(x,y)∣y≤α} is a convex set.

Since the intersection of convex sets is also a convex set.

Therefore,

{(x,f(x))∣f(x)≤α,x∈dom f}is a convex set.

Moreover, we already know that projection of certain coordinate is also a convex set. Therefore,

{x∈dom f∣f(x)≤α}is a convex set.

Therefore, every convex function is a quasi-convex function.

Examples

- logx is quasi-linear on R++

- f(x1,x2)=x1x2 is quasi-concave on R++2

- linear-fractional functionf(x)=cTx+daTx+bdomf={x∣cTx+d>0}

is quasi-linear

💡Note that the sublevel set is half space

- distance ratiof(x)=∥x−b∥2∥x−a∥2dom f={x∣∥x−a∥2≤∥x−b∥2}

is quasi-convex.

Proof

We want to know whether {x∈dom f∣f(x)≤t} is a convex set or not for all a. (For simplicity, we assume that t is non-negative.)

Take g:R2→R, h1:R→R,h2:R→R as follows

h1(x)=∥x−a∥2h2(x)=∥x−b∥2g(x1,x2)=x1−tx2Since h1,h2 are convex and g~ are non-decreasing and non-increasing respectively (if t is negative, g~ are both non-decreasing), g(h1(x),h2(x)) is a convex function.

As we proved above, every convex function is also a quasi-convex funciton.

Therefore,

{x∣∥x−a∥2−t∥x−b∥2≤0}⇔{x∣∥x−b∥2∥x−a∥2≤t}is a convex set.

Since t is arbitrary,

{x∣∥x−a∥2−1∥x−b∥2≤0}⇔{x∣∥x−a∥2≤∥x−b∥2}is a convex set.

Therefore,

{x∣∥x−b∥∥x−a∥≤t and ∥x−a∥2≤∥x−b∥2}⇔{x∈dom f∣f(x)≤t}Therefore, f is a quasi-convex function.

Properties

- modified Jensen’s inequality : for quasi-convex f0≤θ≤1⇒f(θx+(1−θ)y)≤max{f(x),f(y)}

Proof

Since f is a quasi-convex function,

{t∈dom f∣f(t)≤f(x)}{t∈dom f∣f(t)≤f(y)}are a convex set.

We already know that intersection of two convex sets is also a convex set. Therefore,

{t∈dom f∣f(t)≤max{f(x),f(y)}is a convex set.

For simplicity, let’s say

A={t∈dom f∣f(t)≤max{f(x),f(y)}Trivially, x,y∈A. Therefore, for given θ≥0,

θx+(1−θ)y∈ASo,

f(θx+(1−θ)y)≤max{f(x),f(y)}

- first-order condition : Suppose f:Rn→R is differentiable with dom f is convex. Then f is quasi-convex if and only if∀x,y∈dom f,f(y)≤f(x)⇒∇f(x)T(y−x)≤0

Proof

Let f is quasi-convex. Then

A={y∈dom f∣f(y)≤f(x)}is a convex set.

Suppose ∃y∈A such that

∇f(x)T(y−x)>0Then, ∃0<ϵ<1 such that

f(x+(y−x)ϵ)>f(x)Since x,y∈A and A is a convex set,

x+(y−x)ϵ∈AIt is contradicts to the definition of A.

Therefore,

∀x,y∈dom f,f(y)≤f(x)⇒∇f(x)T(y−x)≤0We want to show that for given a

{x∈dom f∣f(x)≤a}is a convex set

- case 1 : ∃x∈dom f such that f(x)=a

By assumption,

y∈{x∈dom f∣f(x)≤a}⇒∇2f(x)T(y−x)≤0⇒y∈dom f∩{y∣∇2f(x)T(y−x)≤0}Since ∇2f(x)T(y−x) is affine with respect to y, {y∣∇2f(x)T(y−x)≤0} is a convex set.

Therefore {x∈dom f∣f(x)≤a} is a convex set.

- case 2 : ∄x∈dom f such that f(x)=a

Since f is differentiable, f is continuous for every x. Therefore, there are only two cases.

- f(x)>a,∀x

In this case, there is no element in sub-level set. Therefore, it is a vacuously true to satisfy the convexity.

- f(x)<a,∀x

In this case, {x∈dom f∣f(x)≤a}=dom f. We already know that dom f is a convex set.

Therefore {x∈dom f∣f(x)≤a} is a convex set.

- f(x)>a,∀x

- case 1 : ∃x∈dom f such that f(x)=a

💡Taking into account that the sublevel set of a quasi-convex function is a convex set, one can understand the above concept as a restriction of the comparison region.

Log-concave and log-convex functions

a positive function f is log-concave if logf

for 0≤θ≤1

f is log-convex if logf is convex.

Examples

- powers : xa on R++ is log-convex for α≤0, log-concave for α≥0

proof

alog(θx+(1−θ)y)≥aθlog(x)+a(1−θ)log(y)if a≥0,

log(θx+(1−θ)y)≥θlog(x)+(1−θ)log(y)the above function is log-concave.

if α≤0,

log(θx+(1−θ)y)≤θlog(x)+(1−θ)log(y)the above function is log-convex.

- many common probability densities are log-concave (ex : normal dist)f(x)=(2π)ndetΣ1e−21(x−xˉ)TΣ−1(x−xˉ)💡If we take logarithm for above function, it is just a quadratic function.

- cumulative Gaussian distribution Φ is log-concave.Φ(x)=2π1∫−∞xe−u2/2du

Properties

- twice differentiable f with convex domain is log-concave if and only if f(x)∇2f(x)⪯∇f(x)∇f(x)T

for all x∈dom f

or equivalently

∇2f(x)⪯f(x)∇f(x)∇f(x)Tfor all x∈dom f

The condition for concave function is 0 for the right side of the above inequality. In log-concave case, the hessian matrix is less than equal to the rank-one matrix. That means we can have at most one positive eigenvalue of the hessian.

💡For a rank-one matrix A=uvT, there is exactly one non-zero eigenvalue, and it is equal to the dot product uTv

- product of log-concave functions is log-concave💡basically related to the sum of the concave function is concave.

- sum of log-concave functions is not always log-concave💡mixture of log-concave distribution is not always log-concave

- integration : if f:Rn×Rm→R is log-concave, theng(x)=∫f(x,y)dy

is log-concave.

Prékopa–Leindler inequalityIn mathematics, the Prékopa–Leindler inequality is an integral inequality closely related to the reverse Young's inequality, the Brunn–Minkowski inequality and a number of other important and classical inequalities in analysis. The result is named after the Hungarian mathematicians András Prékopa and László Leindler.[1][2] https://en.wikipedia.org/wiki/Prékopa–Leindler_inequality

https://en.wikipedia.org/wiki/Prékopa–Leindler_inequality

Consequences of integration property

- convolution f∗g of log-concave functions f,g is log-concave(f∗g)(x)=∫f(x−y)g(y)dy

- if C⊂Rn convex and y is a random variable with log-concave pdf thenf(x)=Prob(x+y∈C)

is log-concave

Proof

Write f(x) as integral of product of log-concave functions

f(x)=∫g(x+y)p(y)dywhere

g(u)={10u∈Cu∈/Cp is pdf of y

Convexity with respect to generalized inequalities

Suppose K⊂Rm is a proper cone with associated generalized inequality ⪯K. We say f:Rn→Rm is K-convex if for all x,y, and 0≤θ≤1,

The function is strictly K-convex if

for all x=y and 0<θ<1

소중한 공감 감사합니다